This post gives the highlights of upgrading the grid infrastructure (GI) of a two-node RAC from 11.2.0.4 to 12.2.0.1. There's an earlier post on upgrading GI from 11.2.0.4 to 12.1.0.2.

When upgrading to Oracle Grid Infrastructure 12c Release 2 (12.2), the upgrade is to an Oracle Standalone Cluster configuration with the Flex Cluster option. As mentioned in the 12.2 RAC install post, GNS is no longer necessary to have a Flex Cluster. If the OCR and voting disks are stored outside ASM, they need to be migrated to ASM before the upgrade to 12.2.

1. Before the GI upgrade, back up the OCR manually. This backup could be used to downgrade the GI from 12.2.0.1 to 11.2.0.4 later on.
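A minimal sketch of the manual OCR backup, wrapped in a hypothetical `backup_ocr` function. It assumes the 11.2.0.4 GI home is /opt/app/11.2.0/grid4 (the source home passed to cluvfy in step 7) and uses `ocrconfig -manualbackup`, which must be run as root; `ocrconfig -showbackup manual` then lists the manual backups so the file name can be noted for a possible downgrade.

```shell
# Hypothetical helper: take a manual OCR backup before the GI upgrade.
# Assumes the 11.2.0.4 GI home below; pass a different home as $1 if needed.
backup_ocr() {
  local grid_home="${1:-/opt/app/11.2.0/grid4}"
  local ocrconfig="$grid_home/bin/ocrconfig"

  if [ ! -x "$ocrconfig" ]; then
    echo "ocrconfig not found under $grid_home" >&2
    return 1
  fi
  if [ "$(id -u)" -ne 0 ]; then
    echo "ocrconfig -manualbackup must be run as root" >&2
    return 1
  fi

  # Write a manual backup, then list manual backups so the new file can be noted
  "$ocrconfig" -manualbackup && "$ocrconfig" -showbackup manual
}

# Usage (as root, on one node):
#   backup_ocr
```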

2. The RAC has been set up with role separation. This requires certain directories to have permissions that allow both the grid user and the oracle user to read and write them. Make cfgtoollogs writable by both grid and oracle; usually it is writable only by its owner, with the group having only read and execute permission. Without write permission on this directory, the following error could be seen when upgrading GI.

WARNING: [Apr 12, 2017 3:20:13 PM] Skipping line: [FATAL] [DBT-00007] User does not have the appropiate write privileges.

INFO: [Apr 12, 2017 3:20:13 PM] End of argument passing to stdin

INFO: [Apr 12, 2017 3:20:13 PM] Read: CAUSE: User does not have the required write privileges on "/opt/app/oracle/cfgtoollogs/dbca" .

INFO: [Apr 12, 2017 3:20:13 PM] CAUSE: User does not have the required write privileges on "/opt/app/oracle/cfgtoollogs/dbca" .

WARNING: [Apr 12, 2017 3:20:13 PM] Skipping line: CAUSE: User does not have the required write privileges on "/opt/app/oracle/cfgtoollogs/dbca" .

chmod 775 $ORACLE_BASE/cfgtoollogs

Another directory that requires write permission for the grid user is dbca inside cfgtoollogs.

chmod 770 $ORACLE_BASE/cfgtoollogs/dbca

As the GI management repository (MGMTDB) is a database, the admin directory is another directory that requires write permission for the grid user. Without this permission, creation of the GI management repository fails with the following:

INFO: [Apr 12, 2017 3:27:01 PM] Read: [FATAL] [DBT-06608] The specified audit file destination (/opt/app/oracle/admin/_mgmtdb/adump) is not writable.

INFO: [Apr 12, 2017 3:27:01 PM] [FATAL] [DBT-06608] The specified audit file destination (/opt/app/oracle/admin/_mgmtdb/adump) is not writable.

WARNING: [Apr 12, 2017 3:27:01 PM] Skipping line: [FATAL] [DBT-06608] The specified audit file destination (/opt/app/oracle/admin/_mgmtdb/adump) is not writable.

chmod 770 $ORACLE_BASE/admin

These permissions must be set on all nodes to avoid the above errors when MGMTDB migrates from node to node during rolling restarts.
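The chmod commands above can be collected into one function and run on every node. This is a sketch: the name `set_gi_upgrade_perms` is hypothetical, and it assumes ORACLE_BASE is /opt/app/oracle (the path in the logs) and that these directories are group-owned by a group both grid and oracle belong to (for example oinstall); otherwise the group bits do not help.

```shell
# Hypothetical helper: apply the permissions listed above under one ORACLE_BASE.
# Run it on every node, as the owner of ORACLE_BASE.
set_gi_upgrade_perms() {
  local base="$1"
  if [ -z "$base" ]; then
    echo "usage: set_gi_upgrade_perms ORACLE_BASE" >&2
    return 1
  fi
  # cfgtoollogs: group-writable so both grid and oracle can create log dirs
  chmod 775 "$base/cfgtoollogs" &&
  # dbca logs are written by the grid user when the MGMTDB is created
  chmod 770 "$base/cfgtoollogs/dbca" &&
  # admin holds the MGMTDB audit destination (admin/_mgmtdb/adump)
  chmod 770 "$base/admin"
}

# Usage, on rhel6m1 and on rhel6m2:
#   set_gi_upgrade_perms /opt/app/oracle
```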

3. Once upgraded, the GI will be a standalone Flex Cluster. However, this doesn't require setting up GNS before the upgrade (confirmed by an Oracle SR); the same applies to a new 12.2 standalone cluster installation. As with other 12c upgrades, the OCR and voting disks must be moved to ASM before the upgrade. Also, 12.2 is supported on RHEL 6.4 or above, so if the OS is RHEL 5, an OS upgrade is required.
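A quick way to check the OS release requirement on each node is sketched below. The function `rhel_release_ok` is hypothetical; it parses /etc/redhat-release (or a release string passed as an argument) and succeeds only for RHEL 6.4 or later.

```shell
# Hypothetical helper: succeed if a RHEL release string is 6.4 or later.
# Defaults to /etc/redhat-release; a release string can be passed as $1.
rhel_release_ok() {
  local rel="${1:-$(cat /etc/redhat-release 2>/dev/null)}"
  local ver major minor
  # Extract the version after "release", e.g. "6.4" or "7"
  ver=$(printf '%s\n' "$rel" | sed -n 's/.*release \([0-9][0-9.]*\).*/\1/p')
  [ -n "$ver" ] || return 1
  major=${ver%%.*}
  minor=${ver#*.}
  minor=${minor%%.*}
  # No dot in the version means no minor release number
  [ "$minor" = "$ver" ] && minor=0
  [ "$major" -gt 6 ] || { [ "$major" -eq 6 ] && [ "$minor" -ge 4 ]; }
}

# Usage, on each node:
#   rhel_release_ok && echo "OS release OK for 12.2 GI"
```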

4. The current versions of the GI are

[grid@rhel6m1 ~]$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.4.0]

[grid@rhel6m1 ~]$ crsctl query crs releaseversion

Oracle High Availability Services release version on the local node is [11.2.0.4.0]

[grid@rhel6m1 ~]$ crsctl query crs softwareversion -all

Oracle Clusterware version on node [rhel6m1] is [11.2.0.4.0]

Oracle Clusterware version on node [rhel6m2] is [11.2.0.4.0]
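Before and after the upgrade it can help to confirm that all nodes report the same software version. A sketch, with a hypothetical `crs_versions_consistent` function that reads the `crsctl query crs softwareversion -all` output shown above and prints the version only when every node agrees.

```shell
# Hypothetical helper: read "crsctl query crs softwareversion -all" output on
# stdin and print the version if every node reports the same one.
crs_versions_consistent() {
  local versions
  # Keep only the trailing [x.x.x.x.x] version field of each node line
  versions=$(sed -n 's/.*is \[\([0-9.]*\)][[:space:]]*$/\1/p' | sort -u)
  [ -n "$versions" ] || return 1
  # More than one distinct version means the nodes are out of step
  [ "$(printf '%s\n' "$versions" | wc -l)" -eq 1 ] || return 1
  printf '%s\n' "$versions"
}

# Usage:
#   crsctl query crs softwareversion -all | crs_versions_consistent
```

For the output above this prints 11.2.0.4.0; after a completed upgrade it should print the 12.2 version on its own.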

5. Check upgrade readiness by running orachk

./orachk -u -o pre

The GI home must be patched to at least PSU 11.2.0.4.161018 before the upgrade; this is checked as part of the upgrade readiness check. The GI home here already had a later PSU applied. In spite of this, orachk raised the following warnings, including several patches flagged as needed:

WARNING => One or more dependent start or stop resources are missing from the OCR.

WARNING => system disk group does not have enough free space to create management database

WARNING => Oracle patch 19855835 is not applied on RDBMS_HOME /opt/app/oracle/product/11.2.0/dbhome_4

WARNING => Oracle patch 20898997 is not applied on RDBMS_HOME /opt/app/oracle/product/11.2.0/dbhome_4

WARNING => Oracle patch 20348910 is not applied on RDBMS_HOME /opt/app/oracle/product/11.2.0/dbhome_4

WARNING => Oracle Patch 23186035 is not applied on RDBMS home /opt/app/oracle/product/11.2.0/dbhome_4
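If the orachk console output is saved to a file, the flagged patch numbers can be pulled out for the MOS search. A sketch; `missing_patches` and the log name orachk_pre.log are hypothetical, and the pattern relies on the "patch NNN is not applied" wording shown in the warnings above.

```shell
# Hypothetical helper: list patch numbers that orachk reports as not applied.
# Reads saved orachk console output on stdin; case-insensitive so both
# "Oracle patch" and "Oracle Patch" lines match.
missing_patches() {
  grep -Eio 'patch [0-9]+ is not applied' | awk '{ print $2 }' | sort -un
}

# Usage:
#   missing_patches < orachk_pre.log
```

For the warnings above this would list 19855835, 20348910, 20898997 and 23186035.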

The dependency warning is due to a GNS dependency in the database resource, even though this GI setup didn't have any GNS resource:

ora.scan2.vip = FOUND

ora.ons = FOUND

ora.scan3.vip = FOUND

ora.net1.network = FOUND

ora.gns = MISSING

ora.DATA.dg = FOUND

ora.FLASH.dg = FOUND

ora.scan1.vip = FOUND

START_DEPENDENCIES=hard(ora.DATA.dg,ora.FLASH.dg) weak(type:ora.listener.type,global:type:ora.scan_listener.type,uniform:ora.ons,global:ora.gns) pullup(ora.DATA.dg,ora.FLASH.dg)

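This warning can be resolved by removing the GNS dependency: stop the database, modify the START_DEPENDENCIES attribute of the database resource as root, then start the database again.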

$ srvctl stop database -d std11g2

# crsctl modify resource ora.std11g2.db -attr "START_DEPENDENCIES='hard(ora.DATA.dg,ora.FLASH.dg) weak(type:ora.listener.type,global:type:ora.scan_listener.type,uniform:ora.ons) pullup(ora.DATA.dg,ora.FLASH.dg)'"

START_DEPENDENCIES=hard(ora.DATA.dg,ora.FLASH.dg) weak(type:ora.listener.type,global:type:ora.scan_listener.type,uniform:ora.ons) pullup(ora.DATA.dg,ora.FLASH.dg)

$ srvctl start database -d std11g2
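Rather than retyping the dependency string, the new value can be derived from the current one. A sketch, with a hypothetical `strip_gns_dependency` filter that removes only the `global:ora.gns` entry; the current value is read with `crsctl stat res ora.std11g2.db -p`.

```shell
# Hypothetical helper: drop the global:ora.gns entry from a START_DEPENDENCIES
# value read on stdin, leaving the other dependencies untouched.
strip_gns_dependency() {
  sed -e 's/,global:ora\.gns//g' -e 's/global:ora\.gns,//g'
}

# Usage (database stopped first; crsctl modify run as root):
#   cur=$(crsctl stat res ora.std11g2.db -p | sed -n 's/^START_DEPENDENCIES=//p')
#   new=$(printf '%s\n' "$cur" | strip_gns_dependency)
#   crsctl modify resource ora.std11g2.db -attr "START_DEPENDENCIES='$new'"
```

Applied to the original value, this yields the same string as the modified START_DEPENDENCIES shown above.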

Download the flagged patches from MOS and apply them (see Patches to apply before upgrading Oracle GI and DB to 12.2.0.1, Doc ID 2180188.1). The following is a short description of what each patch does:

23186035 - Ensures RDBMS homes of lower versions work with the 12.2 GI home.

20348910 - Addresses ORA-22308 during upgrade / downgrade.

20898997 - Addresses ORA-22308 / ORA-600 [8153] or similar errors during upgrade / downgrade.

19855835 - Addresses one of the most commonly reported issues in 12.1.0.2. Applying this patch proactively helps avoid slow upgrades when there is a large amount of data in the stats history tables.
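Once the patches are applied, the RDBMS home inventory can be checked for them. A sketch, assuming `opatch lsinventory` lists each interim patch on a line beginning `Patch  <number>  : applied on`; `missing_from_inventory` is a hypothetical name and prints any requested patch that is not found.

```shell
# Hypothetical helper: print each patch number given as an argument that does
# not appear as an applied patch in "opatch lsinventory" output read on stdin.
missing_from_inventory() {
  local inv p
  inv=$(cat)
  for p in "$@"; do
    printf '%s\n' "$inv" | grep -Eq "^Patch +$p +:" || echo "$p"
  done
}

# Usage (as oracle):
#   /opt/app/oracle/product/11.2.0/dbhome_4/OPatch/opatch lsinventory \
#     -oh /opt/app/oracle/product/11.2.0/dbhome_4 |
#     missing_from_inventory 19855835 20898997 20348910 23186035
```

No output means all four patches are registered in that home.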

The 11.2.0.4 GI setup had a 15 GB normal redundancy disk group (about 14 GB free) for the OCR and voting disks:

NAME                           TYPE   V   TOTAL_MB    FREE_MB
------------------------------ ------ - ---------- ----------
CLUSTER_DG                     NORMAL Y      15342      14516
DATA                           EXTERN N      10236       5417
FLASH                          EXTERN N      10236       2547

This amount of free space is not sufficient for a 12.2 upgrade. The readiness report also gives a warning, stating:

System diskgroup CLUSTER_DG has 14 GB of free space and it requires 35 of free space. - There is insufficient space available in the OCR/voting disk diskgroup to house the MGMTDB. Should you choose to create the MGMTDB during the upgrade process the creation will fail due to space constraints.

Please add space to the diskgroup. The Space requirement for the MGMTDB is 35 GB for clusters up to 4 nodes, for each node above 4 add 500MB/node.
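The space rule quoted by orachk is easy to evaluate by hand, but as a sketch: `mgmtdb_required_mb` and `mgmtdb_fits` are hypothetical helpers, and treating 1 GB as 1024 MB is an assumption about how orachk rounds.

```shell
# Hypothetical helpers for orachk's MGMTDB space rule quoted above:
# 35 GB for up to 4 nodes, plus 500 MB for each node above 4.
# 1 GB is taken as 1024 MB to match ASM's MB figures (an assumption).
mgmtdb_required_mb() {
  local nodes="$1"
  local mb=$((35 * 1024))
  if [ "$nodes" -gt 4 ]; then
    mb=$((mb + (nodes - 4) * 500))
  fi
  echo "$mb"
}

# Succeed if a disk group's FREE_MB satisfies the rule for the node count
mgmtdb_fits() {
  local free_mb="$1" nodes="$2"
  [ "$free_mb" -ge "$(mgmtdb_required_mb "$nodes")" ]
}

# Usage:
#   mgmtdb_fits 14516 2 || echo "CLUSTER_DG too small for MGMTDB"
```

For this two-node cluster the requirement works out to 35840 MB, so CLUSTER_DG (14516 MB free) fails the check, while a disk group with about 39 GB free would pass it.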

However, a 39 GB disk group with normal redundancy (CLUSTER_DG2 below) would pass the orachk readiness test, but the actual GI upgrade would fail.

NAME                             TOTAL_MB    FREE_MB
------------------------------ ---------- ----------
CLUSTER_DG                          15342      14516
DATA                                10236       5387
FLASH                               10236       1503
CLUSTER_DG2                         39933      39648

The following images show the error messages given during the actual GI upgrade for the two situations explained above.

As shown in the image, the expected size for a disk group hosting both the OCR and MGMTDB is 66 GB. However, as per the GI install and upgrade document, the space requirement for a normal redundancy disk group is 78 GB (for a standalone cluster), and for an external redundancy disk group it is 39 GB. Two options are available in this case. If normal redundancy is preferred for both OCR and MGMTDB, create a disk group of at least 78 GB. As part of the upgrade testing, a 92 GB normal redundancy disk group was created and the upgrade succeeded without issue.

NAME                             TOTAL_MB    FREE_MB
------------------------------ ---------- ----------
CLUSTER_DG                          15342      14612
DATA                                10236       5387
FLASH                               10236       1489
CLUSTER_DG3                         92154      91230

However, if space is constrained, create an external redundancy disk group of 40 GB.

NAME                             TOTAL_MB    FREE_MB
------------------------------ ---------- ----------
CLUSTER_DG                          15342      14711
DATA                                10236       5163
FLASH                               10236       1776
GIMR                                40952      40902
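The redundancy-dependent figures from the install guide can be checked the same way against the FREE_MB column. A sketch; `gimr_dg_ok` is hypothetical and covers only the NORMAL (78 GB) and EXTERN (39 GB) cases quoted above, with 1 GB taken as 1024 MB.

```shell
# Hypothetical helper: compare a disk group's FREE_MB with the OCR + MGMTDB
# sizes quoted from the GI install and upgrade guide for a standalone
# cluster: 78 GB for NORMAL redundancy, 39 GB for EXTERN redundancy.
gimr_dg_ok() {
  local type="$1" free_mb="$2" need_gb
  case "$type" in
    NORMAL) need_gb=78 ;;
    EXTERN) need_gb=39 ;;
    *) echo "no figure quoted for redundancy: $type" >&2; return 2 ;;
  esac
  [ "$free_mb" -ge $((need_gb * 1024)) ]
}

# Usage:
#   gimr_dg_ok EXTERN 40902 && echo "GIMR is large enough"
```

With the numbers above, CLUSTER_DG3 (NORMAL, 91230 MB free) and GIMR (EXTERN, 40902 MB free) pass, while the 39 GB normal redundancy CLUSTER_DG2 (39648 MB free) does not.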

Move the OCR and voting disks to it before the upgrade, so that the MGMTDB is created there (for the duration of the upgrade, the OCR and voting disks will be in an external redundancy disk group). Once the upgrade is complete, move the OCR and voting disks back to a normal redundancy disk group, leaving only the MGMTDB in the external redundancy disk group. It's valid to have these two in different disk groups. In fact, for fresh 12.2 installations OUI presents the option to use a separate disk group for MGMTDB.
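Moving the OCR and voting disks uses `ocrconfig -add` / `ocrconfig -delete` and `crsctl replace votedisk`. A sketch as a hypothetical `move_ocr_vote` function, run as root; it assumes both disk groups are mounted on all nodes and that the target has compatible.asm of at least 11.2 (needed for voting files in ASM). The default GI home is the 11.2.0.4 one; pass the 12.2 home when moving back after the upgrade.

```shell
# Hypothetical helper: relocate the OCR and voting files between ASM disk
# groups. Run as root. Disk group names are given without the leading "+".
move_ocr_vote() {
  if [ $# -lt 2 ]; then
    echo "usage: move_ocr_vote SRC_DG DST_DG [GRID_HOME]" >&2
    return 1
  fi
  local src="$1" dst="$2" grid_home="${3:-/opt/app/11.2.0/grid4}"
  local bin="$grid_home/bin"
  if [ ! -x "$bin/ocrconfig" ] || [ ! -x "$bin/crsctl" ]; then
    echo "ocrconfig/crsctl not found under $bin" >&2
    return 1
  fi
  # Add an OCR location in the target disk group before dropping the old one
  "$bin/ocrconfig" -add "+$dst" &&
  "$bin/ocrconfig" -delete "+$src" &&
  # Voting files in ASM are moved as a set to the new disk group
  "$bin/crsctl" replace votedisk "+$dst" &&
  # Show the resulting OCR and voting file locations
  "$bin/ocrcheck" &&
  "$bin/crsctl" query css votedisk
}

# Usage, for example:
#   move_ocr_vote CLUSTER_DG GIMR                          # before the upgrade
#   move_ocr_vote GIMR CLUSTER_DG /opt/app/12.2.0/grid     # after the upgrade
```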

6. Once the GI upgrade readiness tasks are complete, create the directory for the new out-of-place GI home and unzip the 12.2 GI binaries into it. This only needs to be done on one node; during the upgrade the software is copied to and installed on all other nodes in the cluster.

$ unset ORACLE_BASE

$ unset ORACLE_HOME

$ unset ORACLE_SID

mkdir -p /opt/app/12.2.0/grid

cp ~/linuxx64_12201_grid_home.zip /opt/app/12.2.0/grid

cd /opt/app/12.2.0/grid

unzip linuxx64_12201_grid_home.zip
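The staging steps above can also be wrapped in a function. A sketch; `stage_gi_home` is hypothetical and differs from the commands above in one respect: it unzips directly into the new home with `unzip -d` instead of copying the zip there first, so the zip does not end up inside the GI home.

```shell
# Hypothetical helper: the unset/mkdir/unzip steps above as one function.
stage_gi_home() {
  if [ $# -ne 2 ]; then
    echo "usage: stage_gi_home ZIP DEST_GRID_HOME" >&2
    return 1
  fi
  local zip="$1" dest="$2"
  if [ ! -f "$zip" ]; then
    echo "zip not found: $zip" >&2
    return 1
  fi
  # Clear the 11.2 environment in the calling shell so it cannot leak in
  unset ORACLE_BASE ORACLE_HOME ORACLE_SID
  mkdir -p "$dest" || return 1
  # 12.2 GI is an image-based install: the zip holds the home's contents
  unzip -q "$zip" -d "$dest" || return 1
  # gridSetup.sh is the 12.2 GI installer; its presence confirms the extract
  if [ ! -x "$dest/gridSetup.sh" ]; then
    echo "gridSetup.sh not found in $dest" >&2
    return 1
  fi
}

# Usage (as grid, on rhel6m1 only):
#   stage_gi_home ~/linuxx64_12201_grid_home.zip /opt/app/12.2.0/grid
```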

7. Run cluvfy from the new GI home.

[grid@rhel6m1 grid]$ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /opt/app/11.2.0/grid4 -dest_crshome /opt/app/12.2.0/grid -dest_version 12.2.0.1.0 -fixup -verbose

Verifying Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 7.6863GB (8059712.0KB) 8GB (8388608.0KB) passed

rhel6m1 7.6863GB (8059712.0KB) 8GB (8388608.0KB) passed

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 6.3133GB (6620016.0KB) 50MB (51200.0KB) passed

rhel6m1 6.0682GB (6362944.0KB) 50MB (51200.0KB) passed

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 4GB (4194296.0KB) 7.6863GB (8059712.0KB) failed

rhel6m1 4GB (4194296.0KB) 7.6863GB (8059712.0KB) failed

Verifying Swap Size ...FAILED (PRVF-7573)

Verifying Free Space: rhel6m2:/usr,rhel6m2:/var,rhel6m2:/etc,rhel6m2:/sbin,rhel6m2:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr rhel6m2 / 15.415GB 25MB passed

/var rhel6m2 / 15.415GB 5MB passed

/etc rhel6m2 / 15.415GB 25MB passed

/sbin rhel6m2 / 15.415GB 10MB passed

/tmp rhel6m2 / 15.415GB 1GB passed

Verifying Free Space: rhel6m2:/usr,rhel6m2:/var,rhel6m2:/etc,rhel6m2:/sbin,rhel6m2:/tmp ...PASSED

Verifying Free Space: rhel6m1:/usr,rhel6m1:/var,rhel6m1:/etc,rhel6m1:/sbin,rhel6m1:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr rhel6m1 / 5.6611GB 25MB passed

/var rhel6m1 / 5.6611GB 5MB passed

/etc rhel6m1 / 5.6611GB 25MB passed

/sbin rhel6m1 / 5.6611GB 10MB passed

/tmp rhel6m1 / 5.6611GB 1GB passed

Verifying Free Space: rhel6m1:/usr,rhel6m1:/var,rhel6m1:/etc,rhel6m1:/sbin,rhel6m1:/tmp ...PASSED

Verifying User Existence: grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed exists(502)

rhel6m1 passed exists(502)

Verifying Users With Same UID: 502 ...PASSED

Verifying User Existence: grid ...PASSED

Verifying Group Existence: asmadmin ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed exists

rhel6m1 passed exists

Verifying Group Existence: asmadmin ...PASSED

Verifying Group Existence: asmoper ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed exists

rhel6m1 passed exists

Verifying Group Existence: asmoper ...PASSED

Verifying Group Existence: asmdba ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed exists

rhel6m1 passed exists

Verifying Group Existence: asmdba ...PASSED

Verifying Group Existence: oinstall ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed exists

rhel6m1 passed exists

Verifying Group Existence: oinstall ...PASSED

Verifying Group Membership: asmdba ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 yes yes yes passed

rhel6m1 yes yes yes passed

Verifying Group Membership: asmdba ...PASSED

Verifying Group Membership: asmadmin ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 yes yes yes passed

rhel6m1 yes yes yes passed

Verifying Group Membership: asmadmin ...PASSED

Verifying Group Membership: oinstall(Primary) ...

Node Name User Exists Group Exists User in Group Primary Status

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m2 yes yes yes yes passed

rhel6m1 yes yes yes yes passed

Verifying Group Membership: oinstall(Primary) ...PASSED

Verifying Group Membership: asmoper ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 yes yes yes passed

rhel6m1 yes yes yes passed

Verifying Group Membership: asmoper ...PASSED

Verifying Run Level ...

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 3 3,5 passed

rhel6m1 3 3,5 passed

Verifying Run Level ...PASSED

Verifying Hard Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 hard 65536 65536 passed

rhel6m1 hard 65536 65536 passed

Verifying Hard Limit: maximum open file descriptors ...PASSED

Verifying Soft Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 soft 1024 1024 passed

rhel6m1 soft 1024 1024 passed

Verifying Soft Limit: maximum open file descriptors ...PASSED

Verifying Hard Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 hard 16384 16384 passed

rhel6m1 hard 16384 16384 passed

Verifying Hard Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 soft 2047 2047 passed

rhel6m1 soft 2047 2047 passed

Verifying Soft Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum stack size ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rhel6m2 soft 10240 10240 passed

rhel6m1 soft 10240 10240 passed

Verifying Soft Limit: maximum stack size ...PASSED

Verifying Oracle patch:17617807 ...

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

rhel6m1 17617807 17617807 passed

rhel6m2 17617807 17617807 passed

Verifying Oracle patch:17617807 ...PASSED

Verifying Oracle patch:21255373 ...

Node Name Applied Required Comment

------------ ------------------------ ------------------------ ----------

rhel6m1 21255373 21255373 passed

rhel6m2 21255373 21255373 passed

Verifying Oracle patch:21255373 ...PASSED

Verifying This test checks that the source home "/opt/app/11.2.0/grid4" is suitable for upgrading to version "12.2.0.1.0". ...PASSED

Verifying Architecture ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 x86_64 x86_64 passed

rhel6m1 x86_64 x86_64 passed

Verifying Architecture ...PASSED

Verifying OS Kernel Version ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 2.6.32-358.el6.x86_64 2.6.32 passed

rhel6m1 2.6.32-358.el6.x86_64 2.6.32 passed

Verifying OS Kernel Version ...PASSED

Verifying OS Kernel Parameter: semmsl ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 3010 3010 250 passed

rhel6m2 3010 3010 250 passed

Verifying OS Kernel Parameter: semmsl ...PASSED

Verifying OS Kernel Parameter: semmns ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 385280 385280 32000 passed

rhel6m2 385280 385280 32000 passed

Verifying OS Kernel Parameter: semmns ...PASSED

Verifying OS Kernel Parameter: semopm ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 3010 3010 100 passed

rhel6m2 3010 3010 100 passed

Verifying OS Kernel Parameter: semopm ...PASSED

Verifying OS Kernel Parameter: semmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 128 128 128 passed

rhel6m2 128 128 128 passed

Verifying OS Kernel Parameter: semmni ...PASSED

Verifying OS Kernel Parameter: shmmax ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 68719476736 68719476736 4126572544 passed

rhel6m2 68719476736 68719476736 4126572544 passed

Verifying OS Kernel Parameter: shmmax ...PASSED

Verifying OS Kernel Parameter: shmmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 4096 4096 4096 passed

rhel6m2 4096 4096 4096 passed

Verifying OS Kernel Parameter: shmmni ...PASSED

Verifying OS Kernel Parameter: shmall ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 4294967296 4294967296 805971 passed

rhel6m2 4294967296 4294967296 805971 passed

Verifying OS Kernel Parameter: shmall ...PASSED

Verifying OS Kernel Parameter: file-max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 6815744 6815744 6815744 passed

rhel6m2 6815744 6815744 6815744 passed

Verifying OS Kernel Parameter: file-max ...PASSED

Verifying OS Kernel Parameter: ip_local_port_range ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

rhel6m2 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

Verifying OS Kernel Parameter: ip_local_port_range ...PASSED

Verifying OS Kernel Parameter: rmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 4194304 4194304 262144 passed

rhel6m2 4194304 4194304 262144 passed

Verifying OS Kernel Parameter: rmem_default ...PASSED

Verifying OS Kernel Parameter: rmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 4194304 4194304 4194304 passed

rhel6m2 4194304 4194304 4194304 passed

Verifying OS Kernel Parameter: rmem_max ...PASSED

Verifying OS Kernel Parameter: wmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 1048576 1048576 262144 passed

rhel6m2 1048576 1048576 262144 passed

Verifying OS Kernel Parameter: wmem_default ...PASSED

Verifying OS Kernel Parameter: wmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 2097152 2097152 1048576 passed

rhel6m2 2097152 2097152 1048576 passed

Verifying OS Kernel Parameter: wmem_max ...PASSED

Verifying OS Kernel Parameter: aio-max-nr ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 3145728 3145728 1048576 passed

rhel6m2 3145728 3145728 1048576 passed

Verifying OS Kernel Parameter: aio-max-nr ...PASSED

Verifying OS Kernel Parameter: panic_on_oops ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rhel6m1 1 undefined 1 passed

rhel6m2 1 undefined 1 passed

Verifying OS Kernel Parameter: panic_on_oops ...PASSED

Verifying Package: binutils-2.20.51.0.2 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 binutils-2.20.51.0.2-5.36.el6 binutils-2.20.51.0.2 passed

rhel6m1 binutils-2.20.51.0.2-5.36.el6 binutils-2.20.51.0.2 passed

Verifying Package: binutils-2.20.51.0.2 ...PASSED

Verifying Package: compat-libcap1-1.10 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 compat-libcap1-1.10-1 compat-libcap1-1.10 passed

rhel6m1 compat-libcap1-1.10-1 compat-libcap1-1.10 passed

Verifying Package: compat-libcap1-1.10 ...PASSED

Verifying Package: compat-libstdc++-33-3.2.3 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

rhel6m1 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

Verifying Package: compat-libstdc++-33-3.2.3 (x86_64) ...PASSED

Verifying Package: libgcc-4.4.7 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 libgcc(x86_64)-4.4.7-3.el6 libgcc(x86_64)-4.4.7 passed

rhel6m1 libgcc(x86_64)-4.4.7-3.el6 libgcc(x86_64)-4.4.7 passed

Verifying Package: libgcc-4.4.7 (x86_64) ...PASSED

Verifying Package: libstdc++-4.4.7 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 libstdc++(x86_64)-4.4.7-3.el6 libstdc++(x86_64)-4.4.7 passed

rhel6m1 libstdc++(x86_64)-4.4.7-3.el6 libstdc++(x86_64)-4.4.7 passed

Verifying Package: libstdc++-4.4.7 (x86_64) ...PASSED

Verifying Package: libstdc++-devel-4.4.7 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 libstdc++-devel(x86_64)-4.4.7-3.el6 libstdc++-devel(x86_64)-4.4.7 passed

rhel6m1 libstdc++-devel(x86_64)-4.4.7-3.el6 libstdc++-devel(x86_64)-4.4.7 passed

Verifying Package: libstdc++-devel-4.4.7 (x86_64) ...PASSED

Verifying Package: sysstat-9.0.4 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 sysstat-9.0.4-20.el6 sysstat-9.0.4 passed

rhel6m1 sysstat-9.0.4-20.el6 sysstat-9.0.4 passed

Verifying Package: sysstat-9.0.4 ...PASSED

Verifying Package: gcc-4.4.7 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 gcc-4.4.7-3.el6 gcc-4.4.7 passed

rhel6m1 gcc-4.4.7-3.el6 gcc-4.4.7 passed

Verifying Package: gcc-4.4.7 ...PASSED

Verifying Package: gcc-c++-4.4.7 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 gcc-c++-4.4.7-3.el6 gcc-c++-4.4.7 passed

rhel6m1 gcc-c++-4.4.7-3.el6 gcc-c++-4.4.7 passed

Verifying Package: gcc-c++-4.4.7 ...PASSED

Verifying Package: ksh ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 ksh ksh passed

rhel6m1 ksh ksh passed

Verifying Package: ksh ...PASSED

Verifying Package: make-3.81 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 make-3.81-20.el6 make-3.81 passed

rhel6m1 make-3.81-20.el6 make-3.81 passed

Verifying Package: make-3.81 ...PASSED

Verifying Package: glibc-2.12 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 glibc(x86_64)-2.12-1.107.el6 glibc(x86_64)-2.12 passed

rhel6m1 glibc(x86_64)-2.12-1.107.el6 glibc(x86_64)-2.12 passed

Verifying Package: glibc-2.12 (x86_64) ...PASSED

Verifying Package: glibc-devel-2.12 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 glibc-devel(x86_64)-2.12-1.107.el6 glibc-devel(x86_64)-2.12 passed

rhel6m1 glibc-devel(x86_64)-2.12-1.107.el6 glibc-devel(x86_64)-2.12 passed

Verifying Package: glibc-devel-2.12 (x86_64) ...PASSED

Verifying Package: libaio-0.3.107 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.107 passed

rhel6m1 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.107 passed

Verifying Package: libaio-0.3.107 (x86_64) ...PASSED

Verifying Package: libaio-devel-0.3.107 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.107 passed

rhel6m1 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.107 passed

Verifying Package: libaio-devel-0.3.107 (x86_64) ...PASSED

Verifying Package: nfs-utils-1.2.3-15 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 nfs-utils-1.2.3-36.el6 nfs-utils-1.2.3-15 passed

rhel6m1 nfs-utils-1.2.3-36.el6 nfs-utils-1.2.3-15 passed

Verifying Package: nfs-utils-1.2.3-15 ...PASSED

Verifying Package: smartmontools-5.43-1 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 smartmontools-5.43-1.el6 smartmontools-5.43-1 passed

rhel6m1 smartmontools-5.43-1.el6 smartmontools-5.43-1 passed

Verifying Package: smartmontools-5.43-1 ...PASSED

Verifying Package: net-tools-1.60-110 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 net-tools-1.60-110.el6_2 net-tools-1.60-110 passed

rhel6m1 net-tools-1.60-110.el6_2 net-tools-1.60-110 passed

Verifying Package: net-tools-1.60-110 ...PASSED

Verifying Users With Same UID: 0 ...PASSED

Verifying Current Group ID ...PASSED

Verifying Root user consistency ...

Node Name Status

------------------------------------ ------------------------

rhel6m2 passed

rhel6m1 passed

Verifying Root user consistency ...PASSED

Verifying selectivity of ASM discovery string ...PASSED

Verifying ASM spare parameters ...PASSED

Verifying Disk group ASM compatibility setting ...PASSED

Verifying Package: cvuqdisk-1.0.10-1 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rhel6m2 cvuqdisk-1.0.10-1 cvuqdisk-1.0.10-1 passed

rhel6m1 cvuqdisk-1.0.10-1 cvuqdisk-1.0.10-1 passed

Verifying Package: cvuqdisk-1.0.10-1 ...PASSED

Verifying Node Connectivity ...

Verifying Hosts File ...

Node Name Status

------------------------------------ ------------------------

rhel6m1 passed

rhel6m2 passed

Verifying Hosts File ...PASSED

Interface information for node "rhel6m2"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth0 192.168.0.94 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:7E:61:A9 1500

eth0 192.168.0.98 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:7E:61:A9 1500

eth0 192.168.0.125 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:7E:61:A9 1500

eth1 192.168.1.88 192.168.1.0 0.0.0.0 192.168.0.100 08:00:27:69:2C:B6 1500

Interface information for node "rhel6m1"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth0 192.168.0.93 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:AC:F3:CC 1500

eth0 192.168.0.135 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:AC:F3:CC 1500

eth0 192.168.0.145 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:AC:F3:CC 1500

eth0 192.168.0.97 192.168.0.0 0.0.0.0 192.168.0.100 08:00:27:AC:F3:CC 1500

eth1 192.168.1.87 192.168.1.0 0.0.0.0 192.168.0.100 08:00:27:A3:C4:6F 1500

Check: MTU consistency on the private interfaces of subnet "192.168.1.0"

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rhel6m2 eth1 192.168.1.88 192.168.1.0 1500

rhel6m1 eth1 192.168.1.87 192.168.1.0 1500

Check: MTU consistency of the subnet "192.168.0.0".

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rhel6m2 eth0 192.168.0.94 192.168.0.0 1500

rhel6m2 eth0 192.168.0.98 192.168.0.0 1500

rhel6m2 eth0 192.168.0.125 192.168.0.0 1500

rhel6m1 eth0 192.168.0.93 192.168.0.0 1500

rhel6m1 eth0 192.168.0.135 192.168.0.0 1500

rhel6m1 eth0 192.168.0.145 192.168.0.0 1500

rhel6m1 eth0 192.168.0.97 192.168.0.0 1500

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rhel6m1[eth1:192.168.1.87] rhel6m2[eth1:192.168.1.88] yes

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rhel6m1[eth0:192.168.0.93] rhel6m2[eth0:192.168.0.98] yes

rhel6m1[eth0:192.168.0.93] rhel6m2[eth0:192.168.0.125] yes

rhel6m1[eth0:192.168.0.93] rhel6m2[eth0:192.168.0.94] yes

rhel6m1[eth0:192.168.0.93] rhel6m1[eth0:192.168.0.135] yes

rhel6m1[eth0:192.168.0.93] rhel6m1[eth0:192.168.0.145] yes

rhel6m1[eth0:192.168.0.93] rhel6m1[eth0:192.168.0.97] yes

rhel6m2[eth0:192.168.0.98] rhel6m2[eth0:192.168.0.125] yes

rhel6m2[eth0:192.168.0.98] rhel6m2[eth0:192.168.0.94] yes

rhel6m2[eth0:192.168.0.98] rhel6m1[eth0:192.168.0.135] yes

rhel6m2[eth0:192.168.0.98] rhel6m1[eth0:192.168.0.145] yes

rhel6m2[eth0:192.168.0.98] rhel6m1[eth0:192.168.0.97] yes

rhel6m2[eth0:192.168.0.125] rhel6m2[eth0:192.168.0.94] yes

rhel6m2[eth0:192.168.0.125] rhel6m1[eth0:192.168.0.135] yes

rhel6m2[eth0:192.168.0.125] rhel6m1[eth0:192.168.0.145] yes

rhel6m2[eth0:192.168.0.125] rhel6m1[eth0:192.168.0.97] yes

rhel6m2[eth0:192.168.0.94] rhel6m1[eth0:192.168.0.135] yes

rhel6m2[eth0:192.168.0.94] rhel6m1[eth0:192.168.0.145] yes

rhel6m2[eth0:192.168.0.94] rhel6m1[eth0:192.168.0.97] yes

rhel6m1[eth0:192.168.0.135] rhel6m1[eth0:192.168.0.145] yes

rhel6m1[eth0:192.168.0.135] rhel6m1[eth0:192.168.0.97] yes

rhel6m1[eth0:192.168.0.145] rhel6m1[eth0:192.168.0.97] yes

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.1.0" ...PASSED

Verifying subnet mask consistency for subnet "192.168.0.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying Multicast check ...

Checking subnet "192.168.1.0" for multicast communication with multicast group "224.0.0.251"

Verifying Multicast check ...PASSED

Verifying OCR Integrity ...PASSED

Verifying Network Time Protocol (NTP) ...

Verifying '/etc/ntp.conf' ...

Node Name File exists?

------------------------------------ ------------------------

rhel6m2 no

rhel6m1 no

Verifying '/etc/ntp.conf' ...PASSED

Verifying '/var/run/ntpd.pid' ...

Node Name File exists?

------------------------------------ ------------------------

rhel6m2 no

rhel6m1 no

Verifying '/var/run/ntpd.pid' ...PASSED

Verifying Network Time Protocol (NTP) ...PASSED

Verifying Same core file name pattern ...PASSED

Verifying User Mask ...

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

rhel6m2 0022 0022 passed

rhel6m1 0022 0022 passed

Verifying User Mask ...PASSED

Verifying User Not In Group "root": grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rhel6m2 passed does not exist

rhel6m1 passed does not exist

Verifying User Not In Group "root": grid ...PASSED

Verifying Time zone consistency ...PASSED

Verifying VIP Subnet configuration check ...PASSED

Verifying Voting Disk ...PASSED

Verifying resolv.conf Integrity ...

Verifying (Linux) resolv.conf Integrity ...

Node Name Status

------------------------------------ ------------------------

rhel6m1 passed

rhel6m2 passed

checking response for name "rhel6m2" from each of the name servers specified

in "/etc/resolv.conf"

Node Name Source Comment Status

------------ ------------------------ ------------------------ ----------

rhel6m2 192.168.0.66 IPv4 passed

rhel6m2 10.10.10.1 IPv4 passed

checking response for name "rhel6m1" from each of the name servers specified

in "/etc/resolv.conf"

Node Name Source Comment Status

------------ ------------------------ ------------------------ ----------

rhel6m1 192.168.0.66 IPv4 passed

rhel6m1 10.10.10.1 IPv4 passed

Verifying (Linux) resolv.conf Integrity ...PASSED

Verifying resolv.conf Integrity ...PASSED

Verifying DNS/NIS name service ...PASSED

Verifying Daemon "avahi-daemon" not configured and running ...

Node Name Configured Status

------------ ------------------------ ------------------------

rhel6m2 no passed

rhel6m1 no passed

Node Name Running? Status

------------ ------------------------ ------------------------

rhel6m2 no passed

rhel6m1 no passed

Verifying Daemon "avahi-daemon" not configured and running ...PASSED

Verifying Daemon "proxyt" not configured and running ...

Node Name Configured Status

------------ ------------------------ ------------------------

rhel6m2 no passed

rhel6m1 no passed

Node Name Running? Status

------------ ------------------------ ------------------------

rhel6m2 no passed

rhel6m1 no passed

Verifying Daemon "proxyt" not configured and running ...PASSED

Verifying loopback network interface address ...PASSED

Verifying Grid Infrastructure home path: /opt/app/12.2.0/grid ...

Verifying '/opt/app/12.2.0/grid' ...PASSED

Verifying Grid Infrastructure home path: /opt/app/12.2.0/grid ...PASSED

Verifying ACFS Driver Checks ...PASSED

Verifying Privileged group consistency for upgrade ...PASSED

Verifying CRS user Consistency for upgrade ...PASSED

Verifying Clusterware Version Consistency ...PASSED

Verifying Check incorrectly sized ASM Disks ...PASSED

Verifying that default ASM disk discovery string is in use ...PASSED

Verifying Network configuration consistency checks ...PASSED

Verifying File system mount options for path GI_HOME ...PASSED

Verifying /boot mount ...PASSED

Verifying OLR Integrity ...PASSED

Verifying Verify that the ASM instance was configured using an existing ASM parameter file. ...PASSED

Verifying User Equivalence ...PASSED

Verifying /dev/shm mounted as temporary file system ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying zeroconf check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Pre-check for cluster services setup was unsuccessful.

Checks did not pass for the following nodes:

rhel6m2,rhel6m1

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Swap Size ...FAILED

rhel6m2: PRVF-7573 : Sufficient swap size is not available on node "rhel6m2"

[Required = 7.6863GB (8059712.0KB) ; Found = 4GB (4194296.0KB)]

rhel6m1: PRVF-7573 : Sufficient swap size is not available on node "rhel6m1"

[Required = 7.6863GB (8059712.0KB) ; Found = 4GB (4194296.0KB)]

CVU operation performed: stage -pre crsinst

Date: Apr 11, 2017 10:28:40 AM

CVU home: /opt/app/12.2.0/grid/

User: grid

The failure is due to insufficient swap size. On a test setup this can be ignored.

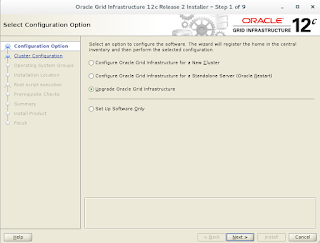
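The pre-check output is long, and only the failed checks matter when deciding whether a failure can be ignored. Below is a minimal Python sketch that pulls the FAILED checks and the per-node PRV* messages out of saved cluvfy output and works out the swap shortfall. The function name and regular expressions are hypothetical and are written against the text shown above, not against any documented CVU output format. Judging by the numbers above (7.6863GB = 8059712.0KB), CVU's "GB" here is 1048576 KB.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical patterns, matched against the cluvfy text shown above.
FAILED_RE = re.compile(r"^Verifying (.+?) \.\.\.FAILED$")
NODE_MSG_RE = re.compile(r"^(\S+): (PRV[A-Z]-\d+) : (.+)$")
SWAP_RE = re.compile(
    r"Required = [\d.]+GB \(([\d.]+)KB\) ; Found = [\d.]+GB \(([\d.]+)KB\)"
)


@dataclass
class NodeFailure:
    node: str
    code: str
    message: str
    shortfall_kb: Optional[float] = None  # only set when a Required/Found line follows


def parse_failures(cvu_output: str) -> Tuple[List[str], List[NodeFailure]]:
    """Return the names of FAILED checks and the per-node PRV* messages."""
    failed_checks: List[str] = []
    failures: List[NodeFailure] = []
    for raw in cvu_output.splitlines():
        line = raw.strip()
        m = FAILED_RE.match(line)
        if m:
            failed_checks.append(m.group(1))
            continue
        m = NODE_MSG_RE.match(line)
        if m:
            failures.append(NodeFailure(m.group(1), m.group(2), m.group(3)))
            continue
        m = SWAP_RE.search(line)
        if m and failures:
            # The [Required ...; Found ...] detail belongs to the preceding node message.
            required_kb, found_kb = float(m.group(1)), float(m.group(2))
            failures[-1].shortfall_kb = required_kb - found_kb
    return failed_checks, failures


if __name__ == "__main__":
    sample = """\
Verifying Swap Size ...FAILED
rhel6m2: PRVF-7573 : Sufficient swap size is not available on node "rhel6m2"
[Required = 7.6863GB (8059712.0KB) ; Found = 4GB (4194296.0KB)]
rhel6m1: PRVF-7573 : Sufficient swap size is not available on node "rhel6m1"
[Required = 7.6863GB (8059712.0KB) ; Found = 4GB (4194296.0KB)]
"""
    checks, failures = parse_failures(sample)
    print("Failed checks:", checks)
    for f in failures:
        gb = f.shortfall_kb / 1048576 if f.shortfall_kb is not None else 0.0
        print(f"{f.node}: {f.code}, swap short by {gb:.2f} GB")
```

For the output above this reports a single failed check (Swap Size) and a shortfall of about 3.69 GB on each node, which is the gap to close if the check should pass rather than be ignored.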

8. Run gridSetup.sh from the new GI home to begin the upgrade.

./gridSetup.sh

9. Run rootupgrade.sh as root when prompted. The following output shows rootupgrade.sh running on the first node.

[root@rhel6m1 grid]# /opt/app/12.2.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /opt/app/12.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /opt/app/12.2.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/opt/app/oracle/crsdata/rhel6m1/crsconfig/rootcrs_rhel6m1_2017-04-12_02-31-52PM.log

2017/04/12 14:31:57 CLSRSC-595: Executing upgrade step 1 of 19: 'UpgradeTFA'.

2017/04/12 14:31:58 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2017/04/12 14:33:31 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.

2017/04/12 14:33:31 CLSRSC-595: Executing upgrade step 2 of 19: 'ValidateEnv'.

2017/04/12 14:33:50 CLSRSC-595: Executing upgrade step 3 of 19: 'GenSiteGUIDs'.

2017/04/12 14:33:52 CLSRSC-595: Executing upgrade step 4 of 19: 'GetOldConfig'.

2017/04/12 14:33:52 CLSRSC-464: Starting retrieval of the cluster configuration data

2017/04/12 14:34:06 CLSRSC-515: Starting OCR manual backup.

2017/04/12 14:34:12 CLSRSC-516: OCR manual backup successful.

2017/04/12 14:34:36 CLSRSC-486:

At this stage of upgrade, the OCR has changed.

Any attempt to downgrade the cluster after this point will require a complete cluster outage to restore the OCR.

2017/04/12 14:34:37 CLSRSC-541:

To downgrade the cluster:

1. All nodes that have been upgraded must be downgraded.

2017/04/12 14:34:37 CLSRSC-542:

2. Before downgrading the last node, the Grid Infrastructure stack on all other cluster nodes must be down.

2017/04/12 14:34:37 CLSRSC-615:

3. The last node to downgrade cannot be a Leaf node.

2017/04/12 14:34:51 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2017/04/12 14:34:51 CLSRSC-595: Executing upgrade step 5 of 19: 'UpgPrechecks'.

2017/04/12 14:34:57 CLSRSC-363: User ignored prerequisites during installation

2017/04/12 14:35:14 CLSRSC-595: Executing upgrade step 6 of 19: 'SaveParamFile'.

2017/04/12 14:35:29 CLSRSC-595: Executing upgrade step 7 of 19: 'SetupOSD'.

2017/04/12 14:35:42 CLSRSC-595: Executing upgrade step 8 of 19: 'PreUpgrade'.

2017/04/12 14:35:49 CLSRSC-468: Setting Oracle Clusterware and ASM to rolling migration mode

2017/04/12 14:35:49 CLSRSC-482: Running command: '/opt/app/12.2.0/grid/bin/asmca -silent -upgradeNodeASM -nonRolling false -oldCRSHome /opt/app/11.2.0/grid4 -oldCRSVersion 11.2.0.4.0 -firstNode true -startRolling true '

ASM configuration upgraded in local node successfully.

2017/04/12 14:36:01 CLSRSC-469: Successfully set Oracle Clusterware and ASM to rolling migration mode

2017/04/12 14:36:19 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack

2017/04/12 14:39:40 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.

2017/04/12 14:39:43 CLSRSC-595: Executing upgrade step 9 of 19: 'CheckCRSConfig'.

2017/04/12 14:39:44 CLSRSC-595: Executing upgrade step 10 of 19: 'UpgradeOLR'.

2017/04/12 14:39:55 CLSRSC-595: Executing upgrade step 11 of 19: 'ConfigCHMOS'.

2017/04/12 14:39:55 CLSRSC-595: Executing upgrade step 12 of 19: 'InstallAFD'.

2017/04/12 14:40:07 CLSRSC-595: Executing upgrade step 13 of 19: 'createOHASD'.

2017/04/12 14:40:18 CLSRSC-595: Executing upgrade step 14 of 19: 'ConfigOHASD'.

2017/04/12 14:40:34 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.conf'

2017/04/12 14:41:05 CLSRSC-595: Executing upgrade step 15 of 19: 'InstallACFS'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel6m1'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel6m1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2017/04/12 14:42:04 CLSRSC-595: Executing upgrade step 16 of 19: 'InstallKA'.

2017/04/12 14:42:15 CLSRSC-595: Executing upgrade step 17 of 19: 'UpgradeCluster'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel6m1'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel6m1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 11g Release 2.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.crsd' on 'rhel6m1'

CRS-2677: Stop of 'ora.crsd' on 'rhel6m1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.crf' on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rhel6m1'

CRS-2677: Stop of 'ora.drivers.acfs' on 'rhel6m1' succeeded

CRS-2677: Stop of 'ora.crf' on 'rhel6m1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'rhel6m1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'rhel6m1' succeeded

CRS-2677: Stop of 'ora.asm' on 'rhel6m1' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rhel6m1'

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rhel6m1' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'rhel6m1'

CRS-2673: Attempting to stop 'ora.evmd' on 'rhel6m1'

CRS-2677: Stop of 'ora.ctssd' on 'rhel6m1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'rhel6m1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rhel6m1'

CRS-2677: Stop of 'ora.cssd' on 'rhel6m1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'rhel6m1'

CRS-2677: Stop of 'ora.gipcd' on 'rhel6m1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel6m1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2017/04/12 14:56:43 CLSRSC-343: Successfully started Oracle Clusterware stack

2017/04/12 14:56:43 CLSRSC-595: Executing upgrade step 18 of 19: 'UpgradeNode'.

2017/04/12 14:56:50 CLSRSC-474: Initiating upgrade of resource types

2017/04/12 14:58:44 CLSRSC-482: Running command: 'srvctl upgrade model -s 11.2.0.4.0 -d 12.2.0.1.0 -p first'

2017/04/12 14:58:44 CLSRSC-475: Upgrade of resource types successfully initiated.

2017/04/12 14:59:05 CLSRSC-595: Executing upgrade step 19 of 19: 'PostUpgrade'.

2017/04/12 14:59:23 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Even after the clusterware on the first node is fully upgraded, the cluster-wide active version remains 11.2.0.4.

[root@rhel6m1 grid]# crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.4.0]

[root@rhel6m1 grid]# crsctl query crs releaseversion

Oracle High Availability Services release version on the local node is [12.2.0.1.0]

[root@rhel6m1 grid]# crsctl query crs softwareversion -all

Oracle Clusterware version on node [rhel6m1] is [12.2.0.1.0]

Oracle Clusterware version on node [rhel6m2] is [11.2.0.4.0]
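Between the two rootupgrade.sh runs the cluster is in a mixed-version (rolling) state: one node reports 12.2.0.1.0 while the active version is still 11.2.0.4.0. A small Python sketch that reads the crsctl text shown above and lists the nodes still waiting for rootupgrade.sh. The function names are hypothetical, and the patterns assume the crsctl wording exactly as printed here.

```python
import re
from typing import Dict, List, Tuple

# Patterns assume the crsctl query output wording shown above.
ACTIVE_RE = re.compile(r"active version on the cluster is \[([\d.]+)\]")
NODE_RE = re.compile(r"version on node \[([^\]]+)\] is \[([\d.]+)\]")


def version_key(version: str) -> Tuple[int, ...]:
    """Turn '12.2.0.1.0' into (12, 2, 0, 1, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def upgrade_status(active_output: str,
                   software_output: str) -> Tuple[str, Dict[str, str], List[str]]:
    """Return the active version, each node's software version and the nodes still behind."""
    m = ACTIVE_RE.search(active_output)
    if m is None:
        raise ValueError("active version line not found")
    active = m.group(1)
    nodes = dict(NODE_RE.findall(software_output))
    if not nodes:
        raise ValueError("no per-node software versions found")
    newest = max(nodes.values(), key=version_key)
    pending = sorted(n for n, v in nodes.items() if version_key(v) < version_key(newest))
    return active, nodes, pending


if __name__ == "__main__":
    active, nodes, pending = upgrade_status(
        "Oracle Clusterware active version on the cluster is [11.2.0.4.0]",
        "Oracle Clusterware version on node [rhel6m1] is [12.2.0.1.0]\n"
        "Oracle Clusterware version on node [rhel6m2] is [11.2.0.4.0]",
    )
    print("active:", active, "| still to run rootupgrade.sh:", pending)
```

With the output above, rhel6m2 is reported as pending. Once every node reports the same software version, the list is empty; as the second node's output shows, the active version itself only moves when the last node runs rootupgrade.sh.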

The following output shows rootupgrade.sh running on the second node.

[root@rhel6m2 ~]# /opt/app/12.2.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /opt/app/12.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /opt/app/12.2.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/opt/app/oracle/crsdata/rhel6m2/crsconfig/rootcrs_rhel6m2_2017-04-12_03-00-25PM.log

2017/04/12 15:00:30 CLSRSC-595: Executing upgrade step 1 of 19: 'UpgradeTFA'.

2017/04/12 15:00:30 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.

2017/04/12 15:02:06 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.

2017/04/12 15:02:06 CLSRSC-595: Executing upgrade step 2 of 19: 'ValidateEnv'.

2017/04/12 15:02:08 CLSRSC-595: Executing upgrade step 3 of 19: 'GenSiteGUIDs'.

2017/04/12 15:02:09 CLSRSC-595: Executing upgrade step 4 of 19: 'GetOldConfig'.

2017/04/12 15:02:09 CLSRSC-464: Starting retrieval of the cluster configuration data

2017/04/12 15:02:25 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.

2017/04/12 15:02:25 CLSRSC-595: Executing upgrade step 5 of 19: 'UpgPrechecks'.

2017/04/12 15:02:26 CLSRSC-363: User ignored prerequisites during installation

2017/04/12 15:02:29 CLSRSC-595: Executing upgrade step 6 of 19: 'SaveParamFile'.

2017/04/12 15:02:33 CLSRSC-595: Executing upgrade step 7 of 19: 'SetupOSD'.

2017/04/12 15:02:35 CLSRSC-595: Executing upgrade step 8 of 19: 'PreUpgrade'.

ASM configuration upgraded in local node successfully.

2017/04/12 15:02:42 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack

2017/04/12 15:06:05 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.

2017/04/12 15:06:11 CLSRSC-595: Executing upgrade step 9 of 19: 'CheckCRSConfig'.

2017/04/12 15:06:12 CLSRSC-595: Executing upgrade step 10 of 19: 'UpgradeOLR'.

2017/04/12 15:06:14 CLSRSC-595: Executing upgrade step 11 of 19: 'ConfigCHMOS'.

2017/04/12 15:06:14 CLSRSC-595: Executing upgrade step 12 of 19: 'InstallAFD'.

2017/04/12 15:06:15 CLSRSC-595: Executing upgrade step 13 of 19: 'createOHASD'.

2017/04/12 15:06:18 CLSRSC-595: Executing upgrade step 14 of 19: 'ConfigOHASD'.

2017/04/12 15:06:33 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.conf'

2017/04/12 15:06:55 CLSRSC-595: Executing upgrade step 15 of 19: 'InstallACFS'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel6m2'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel6m2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2017/04/12 15:07:40 CLSRSC-595: Executing upgrade step 16 of 19: 'InstallKA'.

2017/04/12 15:07:41 CLSRSC-595: Executing upgrade step 17 of 19: 'UpgradeCluster'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel6m2'

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel6m2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

2017/04/12 15:08:39 CLSRSC-343: Successfully started Oracle Clusterware stack

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 12c Release 2.

Successfully taken the backup of node specific configuration in OCR.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2017/04/12 15:09:02 CLSRSC-595: Executing upgrade step 18 of 19: 'UpgradeNode'.

Start upgrade invoked..

2017/04/12 15:09:36 CLSRSC-478: Setting Oracle Clusterware active version on the last node to be upgraded

2017/04/12 15:09:36 CLSRSC-482: Running command: '/opt/app/12.2.0/grid/bin/crsctl set crs activeversion'

Started to upgrade the active version of Oracle Clusterware. This operation may take a few minutes.

Started to upgrade the OCR.

Started to upgrade CSS.

CSS was successfully upgraded.

Started to upgrade Oracle ASM.

Started to upgrade CRS.

CRS was successfully upgraded.

Successfully upgraded the active version of Oracle Clusterware.

Oracle Clusterware active version was successfully set to 12.2.0.1.0.

2017/04/12 15:11:43 CLSRSC-479: Successfully set Oracle Clusterware active version

2017/04/12 15:11:55 CLSRSC-476: Finishing upgrade of resource types

2017/04/12 15:13:12 CLSRSC-482: Running command: 'srvctl upgrade model -s 11.2.0.4.0 -d 12.2.0.1.0 -p last'

2017/04/12 15:13:12 CLSRSC-477: Successfully completed upgrade of resource types

2017/04/12 15:14:00 CLSRSC-595: Executing upgrade step 19 of 19: 'PostUpgrade'.

2017/04/12 15:14:24 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

After the second node (in this case the last node) is upgraded, the active version changes to 12.2.0.1.

[grid@rhel6m2 ~]$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [12.2.0.1.0]

[grid@rhel6m2 ~]$ crsctl query crs releaseversion

Oracle High Availability Services release version on the local node is [12.2.0.1.0]

[grid@rhel6m2 ~]$ crsctl query crs softwareversion -all

Oracle Clusterware version on node [rhel6m1] is [12.2.0.1.0]

Oracle Clusterware version on node [rhel6m2] is [12.2.0.1.0]
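Each CLSRSC-595 line in the rootupgrade.sh output carries a timestamp, so the time spent in each upgrade step can be worked out from the console output saved to a file. A minimal Python sketch, assuming the line format shown above (the function name is hypothetical); each step is measured up to the next step, and the last step up to the CLSRSC-325 line.

```python
import re
from datetime import datetime
from typing import List, Tuple

FMT = "%Y/%m/%d %H:%M:%S"
# Assumes the CLSRSC-595 / CLSRSC-325 line layout shown in the output above.
STEP_RE = re.compile(
    r"^(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) CLSRSC-595: "
    r"Executing upgrade step (\d+) of \d+: '(\w+)'\.$"
)
DONE_RE = re.compile(r"^(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) CLSRSC-325: ")


def step_durations(log_text: str) -> List[Tuple[int, str, int]]:
    """Return (step number, step name, seconds) for each upgrade step found."""
    marks = []
    end = None
    for raw in log_text.splitlines():
        line = raw.strip()
        m = STEP_RE.match(line)
        if m:
            marks.append((int(m.group(2)), m.group(3), datetime.strptime(m.group(1), FMT)))
            continue
        m = DONE_RE.match(line)
        if m:
            end = datetime.strptime(m.group(1), FMT)
    result = []
    for i, (number, name, start) in enumerate(marks):
        stop = marks[i + 1][2] if i + 1 < len(marks) else end
        if stop is not None:  # skip the final step if the log has no CLSRSC-325 line
            result.append((number, name, int((stop - start).total_seconds())))
    return result


if __name__ == "__main__":
    log = """\
2017/04/12 14:42:15 CLSRSC-595: Executing upgrade step 17 of 19: 'UpgradeCluster'.
2017/04/12 14:56:43 CLSRSC-595: Executing upgrade step 18 of 19: 'UpgradeNode'.
2017/04/12 14:59:05 CLSRSC-595: Executing upgrade step 19 of 19: 'PostUpgrade'.
2017/04/12 14:59:23 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
"""
    for number, name, seconds in step_durations(log):
        print(f"step {number:2d} {name:<15} {seconds // 60}m{seconds % 60:02d}s")
```

Reading the timestamps above by hand, rootupgrade.sh took about 27 minutes on the first node (14:31:57 to 14:59:23), with step 17 'UpgradeCluster' alone taking about 14.5 minutes, against about 14 minutes on the second node (15:00:30 to 15:14:24).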

10. After the root scripts have run, the upgrade process completes any remaining tasks and concludes.

11. At this point the GI is upgraded to 12.2. The cluster mode is listed as flex, which can be verified through crsctl or asmcmd.

[grid@rhel6m1 ~]$ crsctl get cluster mode status

Cluster is running in "flex" mode

ASMCMD> showclustermode

ASM cluster : Flex mode enabled

As stated earlier, even though the cluster is a flex cluster, GNS is not set up.

[grid@rhel6m1 ~]$ srvctl config gns

PRKF-1110 : Neither GNS server nor GNS client is configured on this cluster

All the nodes have the "hub" role.

[grid@rhel6m1 ~]$ crsctl get node role config -all

Node 'rhel6m1' configured role is 'hub'

Node 'rhel6m2' configured role is 'hub'
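The downgrade notes printed by rootupgrade.sh (CLSRSC-615) say the last node to downgrade cannot be a Leaf node, so it is worth confirming the cluster mode and node roles together. A minimal Python sketch over the crsctl text shown above; the function name is hypothetical and the patterns assume this exact wording.

```python
import re
from typing import Dict, List, Optional, Tuple

# Patterns assume the crsctl wording shown above.
MODE_RE = re.compile(r'Cluster is running in "(\w+)" mode')
ROLE_RE = re.compile(r"Node '([^']+)' configured role is '(\w+)'")


def flex_summary(mode_output: str,
                 role_output: str) -> Tuple[Optional[str], Dict[str, str], List[str]]:
    """Return the cluster mode, each node's configured role and any non-hub nodes."""
    m = MODE_RE.search(mode_output)
    mode = m.group(1) if m else None
    roles = dict(ROLE_RE.findall(role_output))
    non_hub = sorted(node for node, role in roles.items() if role != "hub")
    return mode, roles, non_hub


if __name__ == "__main__":
    mode, roles, non_hub = flex_summary(
        'Cluster is running in "flex" mode',
        "Node 'rhel6m1' configured role is 'hub'\n"
        "Node 'rhel6m2' configured role is 'hub'",
    )
    print(mode, roles, "non-hub nodes:", non_hub or "none")
```

For this cluster the mode is flex and both nodes are hub nodes, so there is no leaf node to worry about in a downgrade.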

12. If, as part of the upgrade, the OCR and vote disks were moved to an external redundancy disk group, move them back to the normal redundancy disk group.

[root@rhel6m1 app]# ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 4

Total space (kbytes) : 409568

Used space (kbytes) : 12056

Available space (kbytes) : 397512

ID : 1487892601

Device/File Name : +GIMR

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check succeeded

[root@rhel6m1 app]# ocrconfig -add +CLUSTER_DG

[root@rhel6m1 app]# ocrconfig -delete +GIMR

[grid@rhel6m1 app]$ crsctl replace votedisk +CLUSTER_DG

Successful addition of voting disk ddf6f87af94d4f2bbf8a1c5597b2f6fd.

Successful addition of voting disk 152250cb7ef94f3abf1afebc489071fa.

Successful addition of voting disk 87eeffff539b4f9ebf0a5d1932fca9b4.

Successful deletion of voting disk 7917ab02c3e54f5cbf1453f6ba7b1bc7.

Successfully replaced voting disk group with +CLUSTER_DG.

CRS-4266: Voting file(s) successfully replaced

[grid@rhel6m1 app]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE ddf6f87af94d4f2bbf8a1c5597b2f6fd (/dev/sdc1) [CLUSTER_DG]

2. ONLINE 152250cb7ef94f3abf1afebc489071fa (/dev/sdd1) [CLUSTER_DG]

3. ONLINE 87eeffff539b4f9ebf0a5d1932fca9b4 (/dev/sdb1) [CLUSTER_DG]

Located 3 voting disk(s).

After this, the disk group where MGMTDB is located (+GIMR in this case) is used as the location for OCR backups.

[root@rhel6m1 app]# ocrconfig -showbackuploc

The Oracle Cluster Registry backup location is [+GIMR]

ocrconfig -showbackup manual

rhel6m2 2017/07/14 11:20:31 +GIMR:/rhel6m-cluster/OCRBACKUP/backup_20170714_112031.ocr.289.949317631 0

rhel6m2 2017/07/13 17:34:43 +GIMR:/rhel6m-cluster/OCRBACKUP/backup_20170713_173443.ocr.258.949253683 0
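The CLSRSC-486 message during the upgrade warns that a downgrade after that point requires restoring the OCR, so it helps to know which manual backup is the newest and which disk group holds it. A minimal Python sketch that parses the `ocrconfig -showbackup manual` lines shown above; the function name is hypothetical, and any columns after the backup path are ignored because their meaning is not covered here.

```python
import re
from datetime import datetime
from typing import Optional, Tuple

# Assumes "<node> <YYYY/MM/DD HH:MM:SS> <backup path> ..." as in the output above.
BACKUP_RE = re.compile(r"^(\S+)\s+(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2})\s+(\S+)")


def latest_backup(showbackup_output: str) -> Optional[Tuple[str, datetime, str, str]]:
    """Return (node, timestamp, path, disk group) of the newest backup, or None."""
    entries = []
    for raw in showbackup_output.splitlines():
        m = BACKUP_RE.match(raw.strip())
        if m:
            node, ts, path = m.groups()
            entries.append((datetime.strptime(ts, "%Y/%m/%d %H:%M:%S"), node, path))
    if not entries:
        return None
    when, node, path = max(entries)
    disk_group = path.split(":", 1)[0]  # e.g. "+GIMR" for an ASM location
    return node, when, path, disk_group


if __name__ == "__main__":
    output = """\
rhel6m2 2017/07/14 11:20:31 +GIMR:/rhel6m-cluster/OCRBACKUP/backup_20170714_112031.ocr.289.949317631 0
rhel6m2 2017/07/13 17:34:43 +GIMR:/rhel6m-cluster/OCRBACKUP/backup_20170713_173443.ocr.258.949253683 0
"""
    print(latest_backup(output))
```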

13. Size the GI management repository based on the retention period and the number of nodes. The value passed to changerepossize is in MB.

oclumon manage -repos changerepossize 4000

14. Finally, run the cluvfy post-crsinst stage check.

[grid@rhel6m1 ~]$ cluvfy stage -post crsinst -n all

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.1.0" ...PASSED

Verifying subnet mask consistency for subnet "192.168.0.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying Multicast check ...PASSED

Verifying ASM filter driver configuration consistency ...PASSED

Verifying Time zone consistency ...PASSED

Verifying Cluster Manager Integrity ...PASSED

Verifying User Mask ...PASSED

Verifying Cluster Integrity ...PASSED

Verifying OCR Integrity ...PASSED

Verifying CRS Integrity ...

Verifying Clusterware Version Consistency ...PASSED

Verifying CRS Integrity ...PASSED

Verifying Node Application Existence ...PASSED

Verifying Single Client Access Name (SCAN) ...

Verifying DNS/NIS name service 'rac-scan.domain.net' ...

Verifying Name Service Switch Configuration File Integrity ...PASSED

Verifying DNS/NIS name service 'rac-scan.domain.net' ...PASSED

Verifying Single Client Access Name (SCAN) ...PASSED

Verifying OLR Integrity ...PASSED

Verifying Voting Disk ...PASSED

Verifying ASM Integrity ...

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.1.0" ...PASSED

Verifying subnet mask consistency for subnet "192.168.0.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying ASM Integrity ...PASSED

Verifying Device Checks for ASM ...

Verifying Access Control List check ...PASSED

Verifying Device Checks for ASM ...PASSED

Verifying ASM disk group free space ...PASSED

Verifying I/O scheduler ...

Verifying Package: cvuqdisk-1.0.10-1 ...PASSED

Verifying I/O scheduler ...PASSED

Verifying User Not In Group "root": grid ...PASSED

Verifying Clock Synchronization ...PASSED

Verifying VIP Subnet configuration check ...PASSED

Verifying Network configuration consistency checks ...PASSED

Verifying File system mount options for path GI_HOME ...PASSED

Post-check for cluster services setup was successful.

CVU operation performed: stage -post crsinst

Date: Apr 13, 2017 2:06:17 PM

CVU home: /opt/app/12.2.0/grid/

User: grid