Until 12.2, Grid Infrastructure (GI) had no dedicated option for installing an extended cluster. Once the hardware was set up in a transparent way, GI was installed as it would be on a standard cluster where all nodes reside at the same location. With 12.2 a new option was introduced that allows the extended cluster configuration to be selected explicitly, which means GI is now aware of the extended cluster setup.

This post shows the steps for installing an extended cluster using 19.3 GI.

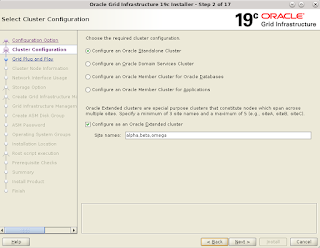

To configure an extended cluster, select the cluster configuration and then select the option to configure it as an extended cluster. In an extended cluster each node is associated with a site. However, an additional quorum site must also be specified in order to proceed with the configuration. In this case alpha and beta are the sites where the cluster nodes reside, but specifying only those two sites gives an error. According to the error message shown, it seems an extended cluster can have up to 5 sites. So three sites are specified in total: alpha and beta for the nodes and omega for the quorum site. The quorum site can be a storage-only site (no Oracle GI is installed there) that is at an equal distance from the other two sites. Oracle even allows NFS to be used for the quorum site.

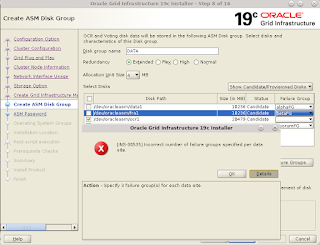

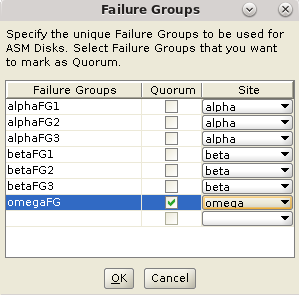

Nothing special needs to be done for the SCAN setup compared to a standard cluster.

As mentioned above, each node in an extended cluster configuration is assigned to a site. In this case the nodes are assigned to sites alpha and beta.

Set up the public and private interconnect network interfaces. In an extended cluster configuration the private interconnect speed could restrict the distance between sites, so that performance doesn't degrade beyond an acceptable level.

Choose ASM for storage, as ASM provides host-based mirroring. In this setup the GIMR installation was not configured.

To store the OCR and vote disks in ASM, the ASM diskgroup must be of extended, high, normal or flex redundancy type. External redundancy is not an option in an extended cluster. Oracle recommends using extended redundancy for this disk group. Extended redundancy requires three failure groups per site in addition to a quorum site. The installer does not allow proceeding with fewer failure groups.

To create failure groups, select the specify failure groups button. Define three failure groups per site and mark the quorum site failure group by selecting it. When specifying disks for the diskgroup, allocate each disk to its respective failure group.

Below is the summary of the GI setup. It lists the sites in the extended cluster and the nodes associated with each.

When prompted, run the root scripts. There's nothing in the root script output to indicate an extended cluster setup. Root script output from site alpha node rhel71.

# /opt/app/19.x.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /opt/app/19.x.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /opt/app/19.x.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/opt/app/oracle/crsdata/rhel71/crsconfig/rootcrs_rhel71_2019-08-21_08-36-05AM.log

2019/08/21 08:36:31 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/08/21 08:36:31 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/08/21 08:36:31 CLSRSC-363: User ignored prerequisites during installation

2019/08/21 08:36:31 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/08/21 08:36:37 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/08/21 08:36:40 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2019/08/21 08:36:40 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2019/08/21 08:36:41 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2019/08/21 08:37:41 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/08/21 08:37:42 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2019/08/21 08:37:52 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/08/21 08:38:21 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/08/21 08:38:21 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/08/21 08:38:35 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/08/21 08:38:36 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/08/21 08:39:59 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/08/21 08:40:14 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2019/08/21 08:41:55 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/08/21 08:42:10 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

ASM has been created and started successfully.

[DBT-30001] Disk groups created successfully. Check /opt/app/oracle/cfgtoollogs/asmca/asmca-190821AM084259.log for details.

2019/08/21 08:44:36 CLSRSC-482: Running command: '/opt/app/19.x.0/grid/bin/ocrconfig -upgrade oracle oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk a06f85aec09b4fa4bf90ce54a22f9ad4.

Successful addition of voting disk d335f6377ec04f96bfca7598c7fa632b.

Successful addition of voting disk d977e270ea0c4f6cbffd9b3c705aff33.

Successful addition of voting disk 1f89e026ce3b4f43bfffba60ceca2e64.

Successful addition of voting disk b8f67fe289bc4f5fbfdac97c76844452.

Successful addition of voting disk 9092fa74e0a34ff6bfae1bfa43c0f6b0.

Successful addition of voting disk 245d2ef7d3384f13bf39dc54343bcc91.

Successfully replaced voting disk group with +OCRDG.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE a06f85aec09b4fa4bf90ce54a22f9ad4 (/dev/oracleasm/quo1) [OCRDG]

2. ONLINE d335f6377ec04f96bfca7598c7fa632b (/dev/oracleasm/bocr3) [OCRDG]

3. ONLINE d977e270ea0c4f6cbffd9b3c705aff33 (/dev/oracleasm/bocr1) [OCRDG]

4. ONLINE 1f89e026ce3b4f43bfffba60ceca2e64 (/dev/oracleasm/bocr2) [OCRDG]

5. ONLINE b8f67fe289bc4f5fbfdac97c76844452 (/dev/oracleasm/aocr3) [OCRDG]

6. ONLINE 9092fa74e0a34ff6bfae1bfa43c0f6b0 (/dev/oracleasm/aocr1) [OCRDG]

7. ONLINE 245d2ef7d3384f13bf39dc54343bcc91 (/dev/oracleasm/aocr2) [OCRDG]

Located 7 voting disk(s).

2019/08/21 08:47:50 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2019/08/21 08:49:23 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/08/21 08:49:24 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2019/08/21 08:53:20 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/08/21 08:54:26 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Root script output from site beta node rhel72.

# /opt/app/19.x.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /opt/app/19.x.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /opt/app/19.x.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/opt/app/oracle/crsdata/rhel72/crsconfig/rootcrs_rhel72_2019-08-21_08-55-15AM.log

2019/08/21 08:55:33 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/08/21 08:55:33 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/08/21 08:55:33 CLSRSC-363: User ignored prerequisites during installation

2019/08/21 08:55:33 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/08/21 08:55:36 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/08/21 08:55:37 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2019/08/21 08:55:37 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2019/08/21 08:55:40 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2019/08/21 08:55:43 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2019/08/21 08:55:43 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/08/21 08:55:58 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/08/21 08:55:58 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/08/21 08:56:02 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/08/21 08:56:03 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/08/21 08:56:36 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/08/21 08:57:18 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/08/21 08:57:22 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2019/08/21 08:58:55 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/08/21 08:58:59 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2019/08/21 08:59:20 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2019/08/21 09:00:35 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/08/21 09:00:36 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2019/08/21 09:01:08 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/08/21 09:01:25 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

At the end of the setup, check if the cluster is extended.

$ crsctl get cluster mode status

Cluster is running in "flex" mode

$ crsctl get cluster extended

CRS-6577: The cluster is extended.

Query all cluster sites.

$ crsctl query cluster site -all

Site 'alpha' identified by GUID '0dc406e8d13acf67bf0fc86402d1573a' in state 'ENABLED' contains nodes 'rhel71' and disks 'OCRDG_0004(OCRDG),OCRDG_0005(OCRDG),OCRDG_0006(OCRDG)'.

Site 'beta' identified by GUID 'fdafc618b7bc4f9eff995d061d05be6f' in state 'ENABLED' contains nodes 'rhel72' and disks 'OCRDG_0001(OCRDG),OCRDG_0002(OCRDG),OCRDG_0003(OCRDG)'.

Site 'omega' identified by GUID '1884d0cc77367fdabfa889ad2efb84dc' in state 'ENABLED' contains disks 'OCRDG_0000(OCRDG)' and no nodes .
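The two checks above (the extended flag and the site listing) could be scripted for a quick post-install sanity check. The following is only a sketch: the function names `is_extended` and `list_sites` are made up for illustration, and the parsing assumes the output keeps the wording shown in this post, with one site per line. Both functions read the command output on stdin, so they can be tried on saved text without a running cluster.

```shell
#!/usr/bin/env bash
# Sketch: interpret the output of the crsctl checks shown in this post.
# Function names are hypothetical; the parsing assumes the message wording
# and field layout seen above (one "Site ..." entry per line).

# Succeeds (exit status 0) when stdin contains the CRS-6577 message.
is_extended() {
  grep -q 'CRS-6577: The cluster is extended'
}

# Prints one "site<TAB>nodes" line per site. A site without nodes
# (the quorum site in this setup) gets "-" in the nodes column.
list_sites() {
  awk -F"'" '/^Site / {
    nodes = "-"
    # Splitting on the quote character puts the site name in $2 and,
    # for sites that host nodes, the node list in $8.
    if ($7 ~ /contains nodes/) nodes = $8
    print $2 "\t" nodes
  }'
}
```

On a cluster node this would be used as `crsctl get cluster extended | is_extended && echo "extended"` and `crsctl query cluster site -all | list_sites`, run as the GI owner with the grid home in the PATH.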

$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE a06f85aec09b4fa4bf90ce54a22f9ad4 (/dev/oracleasm/quo1) [OCRDG]

2. ONLINE d335f6377ec04f96bfca7598c7fa632b (/dev/oracleasm/bocr3) [OCRDG]

3. ONLINE d977e270ea0c4f6cbffd9b3c705aff33 (/dev/oracleasm/bocr1) [OCRDG]

4. ONLINE 1f89e026ce3b4f43bfffba60ceca2e64 (/dev/oracleasm/bocr2) [OCRDG]

5. ONLINE b8f67fe289bc4f5fbfdac97c76844452 (/dev/oracleasm/aocr3) [OCRDG]

6. ONLINE 9092fa74e0a34ff6bfae1bfa43c0f6b0 (/dev/oracleasm/aocr1) [OCRDG]

7. ONLINE 245d2ef7d3384f13bf39dc54343bcc91 (/dev/oracleasm/aocr2) [OCRDG]

Located 7 voting disk(s).

Even though the ASM diskgroup where the OCR and vote disks are stored was created using extended redundancy, it is possible to create a normal redundancy disk group by specifying disks from each site. One of the scenarios where Oracle suggests the use of normal redundancy is an extended cluster configuration. Run asmca, select normal redundancy for the disk group and specify a disk from each site.

$ crsctl query cluster site -all

Site 'alpha' identified by GUID '0dc406e8d13acf67bf0fc86402d1573a' in state 'ENABLED' contains nodes 'rhel71' and disks 'DATA_0000(DATA),FRA_0000(FRA),OCRDG_0004(OCRDG),OCRDG_0005(OCRDG),OCRDG_0006(OCRDG)'.

Site 'beta' identified by GUID 'fdafc618b7bc4f9eff995d061d05be6f' in state 'ENABLED' contains nodes 'rhel72' and disks 'DATA_0001(DATA),FRA_0001(FRA),OCRDG_0001(OCRDG),OCRDG_0002(OCRDG),OCRDG_0003(OCRDG)'.

Site 'omega' identified by GUID '1884d0cc77367fdabfa889ad2efb84dc' in state 'ENABLED' contains disks 'OCRDG_0000(OCRDG)' and no nodes .
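Since a normal redundancy disk group in this setup is only useful if it has a disk at each node site, the site listing can be used to confirm that. Below is a rough sketch, not something from the installer or Oracle tooling: `sites_missing_diskgroup` is a hypothetical helper that reads the `crsctl query cluster site -all` output on stdin and prints every node-hosting site that has no disk in the named disk group. It assumes the one-site-per-line layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: report node-hosting sites that have no disk in a given disk group.
# The helper name and the parsing are assumptions based on the output format
# shown in this post; the quorum site (no nodes) is deliberately skipped.
sites_missing_diskgroup() {
  awk -F"'" -v dg="$1" '
    /^Site / && $7 ~ /contains nodes/ {
      # $10 is the comma-separated disk list, e.g. DATA_0000(DATA),FRA_0000(FRA)
      if (index($10, "(" dg ")") == 0) print $2
    }'
}
```

For example, `crsctl query cluster site -all | sites_missing_diskgroup DATA` prints nothing when both alpha and beta hold a DATA disk, as in the listing above.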

Another point of concern is the preferred read option for ASM diskgroups. It seems that for an extended cluster this is enabled by default.

INST_ID DISKGROUP_ ATTRIBUTE_NAME VALUE

---------- ---------- ------------------------------ ----------

1 DATA preferred_read.enabled TRUE

1 FRA preferred_read.enabled TRUE

1 OCRDG preferred_read.enabled TRUE

2 DATA preferred_read.enabled TRUE

2 FRA preferred_read.enabled TRUE

2 OCRDG preferred_read.enabled TRUE

As per the Oracle documentation: "In an Oracle extended cluster, which contains nodes that span multiple physically separated sites, the PREFERRED_READ.ENABLED disk group attribute controls whether preferred read functionality is enabled for a disk group. If preferred read functionality is enabled, then this functionality enables an instance to determine and read from disks at the same site as itself, which can improve performance".

It also seems that setting ASM_PREFERRED_READ_FAILURE_GROUPS is available for backward compatibility. The following is from the Oracle documentation: "Whether or not PREFERRED_READ.ENABLED has been enabled, preferred read can be set at the failure group level on an Oracle ASM instance or a client instance in a cluster with the ASM_PREFERRED_READ_FAILURE_GROUPS initialization parameter, which is available for backward compatibility".

The following shows that each ASM instance has set the preferred read disks to those local to its site.
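The query behind the attribute listing above is not shown in the post. A query along the following lines, joining `gv$asm_attribute` to `gv$asm_diskgroup`, should give a comparable result. This is an assumption rather than the statement actually used, and the column aliases and the wrapper function name `preferred_read_sql` are chosen here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: emit a query listing preferred_read.enabled per instance and disk group.
# The heredoc delimiter is quoted so the shell does not expand "$asm_...".
# Column aliases and formatting are illustrative, not taken from the post.
preferred_read_sql() {
  cat <<'SQL'
set linesize 120 pagesize 100
column diskgroup_name format a10
column attribute_name format a30
column value format a10
select a.inst_id,
       g.name as diskgroup_name,
       a.name as attribute_name,
       a.value
  from gv$asm_attribute a
  join gv$asm_diskgroup g
    on g.inst_id = a.inst_id
   and g.group_number = a.group_number
 where a.name = 'preferred_read.enabled'
 order by a.inst_id, g.name;
SQL
}
```

With the ASM environment set for the GI owner, the output of the function can be piped into SQL*Plus, for example `preferred_read_sql | sqlplus -s "/ as sysasm"`.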

SQL> select inst_id,site_name,name,failgroup,preferred_read,path from gv$asm_disk order by 1,2,3,4;

INST_ID SITE_NAME NAME FAILGROUP P PATH

---------- ------------------------------ ------------------------------ ------------------------------ - ----------------------------------------

1 alpha DATA_0000 DATA_0000 Y /dev/oracleasm/adata1

alpha FRA_0000 FRA_0000 Y /dev/oracleasm/afra1

alpha OCRDG_0004 ALPHAFG3 Y /dev/oracleasm/aocr3

alpha OCRDG_0005 ALPHAFG2 Y /dev/oracleasm/aocr2

alpha OCRDG_0006 ALPHAFG1 Y /dev/oracleasm/aocr1

beta DATA_0001 DATA_0001 N /dev/oracleasm/bdata1

beta FRA_0001 FRA_0001 N /dev/oracleasm/bfra1

beta OCRDG_0001 BETAFG3 N /dev/oracleasm/bocr3

beta OCRDG_0002 BETAFG2 N /dev/oracleasm/bocr2

beta OCRDG_0003 BETAFG1 N /dev/oracleasm/bocr1

omega OCRDG_0000 OMEGAFG N /dev/oracleasm/quo1

INST_ID SITE_NAME NAME FAILGROUP P PATH

---------- ------------------------------ ------------------------------ ------------------------------ - ----------------------------------------

2 alpha DATA_0000 DATA_0000 N /dev/oracleasm/adata1

alpha FRA_0000 FRA_0000 N /dev/oracleasm/afra1

alpha OCRDG_0004 ALPHAFG3 N /dev/oracleasm/aocr3

alpha OCRDG_0005 ALPHAFG2 N /dev/oracleasm/aocr2

alpha OCRDG_0006 ALPHAFG1 N /dev/oracleasm/aocr1

beta DATA_0001 DATA_0001 Y /dev/oracleasm/bdata1

beta FRA_0001 FRA_0001 Y /dev/oracleasm/bfra1

beta OCRDG_0001 BETAFG3 Y /dev/oracleasm/bocr3

beta OCRDG_0002 BETAFG2 Y /dev/oracleasm/bocr2

beta OCRDG_0003 BETAFG1 Y /dev/oracleasm/bocr1

omega OCRDG_0000 OMEGAFG N /dev/oracleasm/quo1

There was no special setup for installing and creating a database on the extended cluster. The steps were the same as on a standard 19c cluster.

Related Posts

Installing 19c (19.3) RAC on RHEL 7 Using Response File

Installing 18c (18.3) RAC on RHEL 7 with Role Separation - Clusterware

Installing 12cR2 (12.2.0.1) RAC on RHEL 6 with Role Separation - Clusterware

Installing 12c (12.1.0.2) Flex Cluster on RHEL 6 with Role Separation

Installing 12c (12.1.0.1) RAC on RHEL 6 with Role Separation - Clusterware

Installing 11gR2 (11.2.0.3) GI with Role Separation on RHEL 6

Installing 11gR2 (11.2.0.3) GI with Role Separation on OEL 6

Installing 11gR2 Standalone Server with ASM and Role Separation on RHEL 6

11gR2 Standalone Data Guard (with ASM and Role Separation)