Looking at the breakdown of the wait events for the SQL it was seen that majority of waits were related to parallel query.

Beside the parallel query related wait events one other thing that stood out was the result cache contention. There has been a previous experience with result cache contention that manifested as a latch free event. However in this case latch free wasn't the only prominent wait event. Strange thing was that query was not suppose to use parallelism when executing. None of the underlying table used had degree greater than 1 (None of the reasons mentioned in 196938.1 were there). Parallel query related parameters were set at default.

NAME VALUE ---------------------------------------- ---------- parallel_server TRUE parallel_server_instances 3 parallel_min_percent 0 parallel_min_servers 0 parallel_max_servers 960 parallel_instance_group parallel_execution_message_size 16384 parallel_degree_policy MANUAL parallel_adaptive_multi_user TRUE parallel_threads_per_cpu 2 parallel_automatic_tuning FALSE parallel_io_cap_enabled FALSE parallel_min_time_threshold AUTO parallel_degree_limit CPU parallel_force_local FALSE parallel_servers_target 384parallel_degree_policy was introduced on 11.2 which "specifies whether or not automatic degree of Parallelism, statement queuing, and in-memory parallel execution will be enabled". Setting this to manual disable this feature and behavior is same as 11.1. Application user session did have pq_status enabled (1289785.1) and setting parallel_max_servers to 0 disabled it (alter session disable parallel query wasn't an option in this case) but still didn't eliminate the parallel query related waits.

Another thing noticed was that only some of the session ran in parallel while others did not. In the above image session 2687 ran in parallel (indicated by QC SID column) while other session did not run in parallel. This session details show parallel query, result cache wait events.

Result cache contention was understandable since the query did employ a function on it's select clause which had result cache enabled. (eg. select myfunc(column_id), col2, col3...., result cache was enabled on "myfunc" function). But how the parallel query fit in wasn't clear and there was a clear increase of the parallel query related wait events since this deployment. 1,2 and 3 are RAC instance IDs

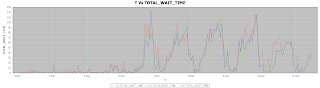

Latch free waits and wait times

enq: PS - contention waits and wait times

enq: RC - Result Cache: Contention waits and wait times

Reliable message waits and wait times

Although the wait time values could be considered low, when compared to the situation before where these were near 0, this is a significant increase.

Further monitoring showed that beside this particular SQL no other application SQL was using parallelism except for the following non application SQL.

Looking at the SQL, this is clearly related to result cache access. There was no information available on the oracle reference documentation on this view (it could be an internal view not for end users). Could only guess what is used for, where clause provides a good clue ,instance id and key, possible result cache key. Querying the execution plan of this shows parallel query being used

SQL> select * from table(dbms_xplan.display_CURSOR('8sd1v66dnj4m4'));

SQL_ID 8sd1v66dnj4m4, child number 0

-------------------------------------

select inst_id, blk_num, blk_seq, blk_dat from gv$result_cache_rd where

inst_id = :1 and key = :2

Plan hash value: 238457506

--------------------------------------------------------------------------------------

| Id | Operation | Name | TQ |IN-OUT| PQ Distrib |

--------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | |

|* 1 | PX COORDINATOR | | | | |

| 2 | PX SEND QC (RANDOM) | :TQ10000 | Q1,00 | P->S | QC (RAND) |

|* 3 | VIEW | GV$RESULT_CACHE_RD | Q1,00 | PCWP | |

|* 4 | FIXED TABLE FIXED INDEX| X$QESRCRD (ind:1) | Q1,00 | PCWP | |

--------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter(("KEY"=:2 AND "INST_ID"=:1))

3 - filter(("KEY"=:2 AND "INST_ID"=:1))

4 - filter("QESRCRD_KEY"=:2)

Note

-----

- rule based optimizer used (consider using cbo)So could it be that when a business SQL result in a cache hit, the results are searched in the cache using parallel SQL? Hard to say without exact information on what gv$result_cache_rd is used for. But it's clear that parallel query related waits were caused by this SQL. To test this result cache was removed from the function used in the select clause. This resulted in drop in the parallel query related wait events (20 Dec mark).latch free waits and wait times

enq: PS - contention waits and wait times

enq: RC - Result Cache: Contention waits and wait times

Reliable message waits and wait times

This confirms that parallel query related waits were due to the result cache access. Removing the result cache from the function also made the average elapsed time for the SQL stable compared to when having result cache.

Update 02 January 2014

Further test has shown the V$RESULT_CACHE_RD comes into in RAC system when a instance request missing result from a result cache of a remote node. Following test case could used to confirm this.

create table x (a number, b number);

create table y (c number, d number);

begin

for i in 1 .. 10

loop

insert into x values(i,round(dbms_random.value(1,10)));

end loop;

end;

/

begin

for i in 25 .. 45

loop

insert into y values(i,mod(i,10));

end loop;

end;

/

create or replace function myfunc(inum in number) return number

result_cache

as retnum number;

begin

select b into retnum from x where a=inum;

return retnum;

end;

/It is best if the database could be restarted if not shared pool cleared (as such not good to test on a production system). Assuming this is a two node RAC run the following on both nodesSQL> set line 180

SQL> set pagesize 0

SQL> select * from table(dbms_xplan.display_CURSOR('8sd1v66dnj4m4'));

SQL_ID: 8sd1v66dnj4m4, child number: 0 cannot be found Since the sql text is Oracle provided it could be assumed that SQL ID (which was identified earlier) will remain the same. This shows that SQL does not exits in the library cache (if DB was restarted, this means it never got executed, assuming not been paged out)Now on node 1 run the following SQL (output has been truncated)

SQL> select c,myfunc(d) from y;

25 3

26 4

27 1

28 2Run the first SQL again and it still gives a negative resultsSQL> select * from table(dbms_xplan.display_CURSOR('8sd1v66dnj4m4'));

SQL_ID: 8sd1v66dnj4m4, child number: 0 cannot be foundParallel result cache access hasn't occurred yet. On the same instance the application SQL could be run many times and SQL (8sd1v66dnj4m4) will not get executed.Now run the application SQL on the node 2.

SQL> select c,myfunc(d) from y;

25 3

26 4

27 1

28 2This time the parallel result cache access query has been executedSQL> select * from table(dbms_xplan.display_CURSOR('8sd1v66dnj4m4'));

SQL_ID 8sd1v66dnj4m4, child number 0

-------------------------------------

select inst_id, blk_num, blk_seq, blk_dat from gv$result_cache_rd where

inst_id = :1 and key = :2

Plan hash value: 238457506

--------------------------------------------------------------------------------------

| Id | Operation | Name | TQ |IN-OUT| PQ Distrib |

--------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | |

|* 1 | PX COORDINATOR | | | | |

| 2 | PX SEND QC (RANDOM) | :TQ10000 | Q1,00 | P->S | QC (RAND) |

|* 3 | VIEW | GV$RESULT_CACHE_RD | Q1,00 | PCWP | |

|* 4 | FIXED TABLE FIXED INDEX| X$QESRCRD (ind:1) | Q1,00 | PCWP | |

--------------------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter(("KEY"=:2 AND "INST_ID"=:1))

3 - filter(("KEY"=:2 AND "INST_ID"=:1))

4 - filter("QESRCRD_KEY"=:2)

Note

-----

- rule based optimizer used (consider using cbo)

28 rows selected.This will now be present on the library cache of both instances. This confirms inter instance result cache access is done using parallel query even though database is configured not to use parallel query.Read Method 3 on this article for explanation on how result cache behave in a 11.2 RAC environment.