The RAC setup in this case is a two-node RAC, and the node named rhel72 will be removed from the cluster. The database is a CDB with a single PDB.

```
SQL> select instance_number,instance_name,host_name from gv$instance;

INSTANCE_NUMBER INSTANCE_NAME    HOST_NAME
--------------- ---------------- --------------------
              1 ent18c1          rhel71.domain.net
              2 ent18c2          rhel72.domain.net    #<-- node to be removed
```

SQL> select inst_id,con_id,name from gv$pdbs order by 1;

INST_ID CON_ID NAME

---------- ---------- --------------

1 2 PDB$SEED

1 3 PDB18C

2 2 PDB$SEED

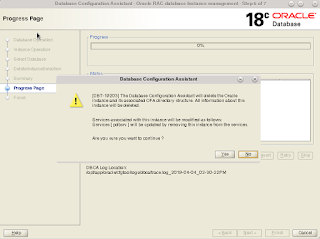

2 3 PDB18C1. First phase include removing the database instance from the node to be deleted. For this run the DBCA on any node except on the node that has the instance being deleted. In this case DBCA is run from node rhel71. Follow the instance management option to remove the instance.Select RAC database instance management and delete instance options. Following message is shown with regard to the service associated with the PDB running on the instance being deleted. DBCA will modify the service to include only the remaining instances.
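DBCA can also delete the instance non-interactively. The sketch below only prints a silent-mode command built from this article's names (ent18c, ent18c2). It does not run anything. The flags follow Oracle's documented `dbca -silent -deleteInstance` syntax, but check them against the DBCA documentation for your release. The helper name `dbca_delete_instance_cmd` is hypothetical. The SYS password option (`-sysDBAPassword`) is deliberately left out of the printed command.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) a silent-mode DBCA command that
# deletes one RAC instance. Run the printed command on a surviving node.
dbca_delete_instance_cmd() {
  gdb_name=$1            # global database name, e.g. ent18c
  instance_name=$2       # instance to delete, e.g. ent18c2
  sysdba_user=${3:-sys}  # SYSDBA user DBCA connects as (defaults to sys)
  # -sysDBAPassword is intentionally omitted from the printed command.
  printf 'dbca -silent -deleteInstance -gdbName %s -instanceName %s -sysDBAUserName %s\n' \
    "$gdb_name" "$instance_name" "$sysdba_user"
}
```

For example, `dbca_delete_instance_cmd ent18c ent18c2` prints the command for this setup. Review it before running it as the oracle user on rhel71.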

2. At the end of the DBCA run, the database instance has been removed from the node being deleted, as the following queries show.

```
SQL> select instance_number,instance_name,host_name from gv$instance;

INSTANCE_NUMBER INSTANCE_NAME    HOST_NAME
--------------- ---------------- -------------------
              1 ent18c1          rhel71.domain.net
```

```
SQL> select inst_id,con_id,name from gv$pdbs order by 1;

   INST_ID     CON_ID NAME
---------- ---------- --------------
         1          2 PDB$SEED
         1          3 PDB18C
```

The Oracle RAC configuration is updated to reflect the change in instances, and the database service is updated as well.

```
srvctl config database -db ent18c
Database unique name: ent18c
Database name: ent18c
Oracle home: /opt/app/oracle/product/18.x.0/dbhome_1
Oracle user: oracle
Spfile: +DATA/ENT18C/PARAMETERFILE/spfile.272.1003495623
Password file: +DATA/ENT18C/PASSWORD/pwdent18c.256.1003490291
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools:
Disk Groups: DATA,FRA
Mount point paths:
Services: pdbsrv
Type: RAC
Start concurrency:
Stop concurrency:
OSDBA group: dba
OSOPER group: oper
Database instances: ent18c1
Configured nodes: rhel71
CSS critical: no
CPU count: 0
Memory target: 0
Maximum memory: 0
Default network number for database services:
Database is administrator managed
```

```
srvctl config service -db ent18c -service pdbsrv
Service name: pdbsrv
Server pool:
Cardinality: 1
Service role: PRIMARY
Management policy: AUTOMATIC
DTP transaction: false
AQ HA notifications: false
Global: false
Commit Outcome: false
Failover type:
Failover method:
Failover retries:
Failover delay:
Failover restore: NONE
Connection Load Balancing Goal: LONG
Runtime Load Balancing Goal: NONE
TAF policy specification: NONE
Edition:
Pluggable database name: pdb18c
Hub service:
Maximum lag time: ANY
SQL Translation Profile:
Retention: 86400 seconds
Replay Initiation Time: 300 seconds
Drain timeout:
Stop option:
Session State Consistency: DYNAMIC
GSM Flags: 0
Service is enabled
Preferred instances: ent18c1
Available instances:
CSS critical: no
```

3. Check whether the redo log thread of the deleted instance has been removed from the database. If not, remove the redo thread associated with the deleted instance.
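Suppose the gv$log query for this step still returned rows for the deleted instance's thread (thread 2 here). The cleanup is then to disable that thread and drop its log groups. The sketch below is a hypothetical helper, `redo_thread_cleanup_sql`. It reads spooled gv$log rows (INST_ID, GROUP#, THREAD#, headings off) on stdin and prints the SQL for review instead of executing it. gv$log returns each group once per open instance, so duplicate group numbers are skipped. The statements assume the instance that owned the thread is no longer running.

```shell
#!/bin/sh
# Hypothetical helper: $1 = redo thread to remove. Reads "INST_ID GROUP# THREAD#"
# rows on stdin and prints the SQL to disable the thread and drop its groups.
redo_thread_cleanup_sql() {
  awk -v t="$1" '
    # Keep only rows of three numeric columns that belong to thread t;
    # headings, dashes and blank lines are ignored.
    NF == 3 && $1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $3 ~ /^[0-9]+$/ &&
    $3 == t && !seen[$2]++ {
      groups[++n] = $2
    }
    END {
      if (n == 0) exit 0   # thread already gone: print nothing
      printf "ALTER DATABASE DISABLE THREAD %s;\n", t
      for (i = 1; i <= n; i++)
        printf "ALTER DATABASE DROP LOGFILE GROUP %s;\n", groups[i]
    }'
}
```

With the output shown for this setup, only thread 1 remains, so `redo_thread_cleanup_sql 2` prints nothing.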

```
SQL> select inst_id,group#,thread# from gv$log;

   INST_ID     GROUP#    THREAD#
---------- ---------- ----------
         1          1          1
         1          2          1
```

Only thread 1 is listed, so the redo thread of the deleted instance is already gone and nothing needs to be removed here.

4. Once the instance removal is complete, the next step is to remove the database software. In 18c this differs from removing a node in 12cR1: the inventory does not need to be updated before removing the database software. Simply run deinstall with the -local option on the node to be deleted. It is important to include -local; without it, the Oracle database software on all nodes will be uninstalled.

```
cd /opt/app/oracle/product/18.x.0/dbhome_1/deinstall/
./deinstall -local
```

```
Checking for required files and bootstrapping ...
Please wait ...
Location of logs /opt/app/oraInventory/logs/

############ ORACLE DECONFIG TOOL START ############

######################### DECONFIG CHECK OPERATION START #########################
## [START] Install check configuration ##

Checking for existence of the Oracle home location /opt/app/oracle/product/18.x.0/dbhome_1
Oracle Home type selected for deinstall is: Oracle Real Application Cluster Database
Oracle Base selected for deinstall is: /opt/app/oracle
Checking for existence of central inventory location /opt/app/oraInventory
Checking for existence of the Oracle Grid Infrastructure home /opt/app/18.x.0/grid
The following nodes are part of this cluster: rhel72,rhel71
Checking for sufficient temp space availability on node(s) : 'rhel72'

## [END] Install check configuration ##

Network Configuration check config START
Network de-configuration trace file location: /opt/app/oraInventory/logs/netdc_check2019-04-04_02-52-54PM.log
Network Configuration check config END

Database Check Configuration START
Database de-configuration trace file location: /opt/app/oraInventory/logs/databasedc_check2019-04-04_02-52-54PM.log

Use comma as separator when specifying list of values as input

Specify the list of database names that are configured locally on this node for this Oracle home. Local configurations of the discovered databases will be removed [ent18c2]:
Database Check Configuration END
######################### DECONFIG CHECK OPERATION END #########################

####################### DECONFIG CHECK OPERATION SUMMARY #######################
Oracle Grid Infrastructure Home is: /opt/app/18.x.0/grid
The following nodes are part of this cluster: rhel72,rhel71
The cluster node(s) on which the Oracle home deinstallation will be performed are:rhel72
Oracle Home selected for deinstall is: /opt/app/oracle/product/18.x.0/dbhome_1
Inventory Location where the Oracle home registered is: /opt/app/oraInventory
The option -local will not modify any database configuration for this Oracle home.
Do you want to continue (y - yes, n - no)? [n]: y
A log of this session will be written to: '/opt/app/oraInventory/logs/deinstall_deconfig2019-04-04_02-51-47-PM.out'
Any error messages from this session will be written to: '/opt/app/oraInventory/logs/deinstall_deconfig2019-04-04_02-51-47-PM.err'

######################## DECONFIG CLEAN OPERATION START ########################
Database de-configuration trace file location: /opt/app/oraInventory/logs/databasedc_clean2019-04-04_02-52-54PM.log

Network Configuration clean config START
Network de-configuration trace file location: /opt/app/oraInventory/logs/netdc_clean2019-04-04_02-52-54PM.log
Network Configuration clean config END

######################### DECONFIG CLEAN OPERATION END #########################

####################### DECONFIG CLEAN OPERATION SUMMARY #######################
#######################################################################

############# ORACLE DECONFIG TOOL END #############

Using properties file /tmp/deinstall2019-04-04_02-50-37PM/response/deinstall_2019-04-04_02-51-47-PM.rsp
Location of logs /opt/app/oraInventory/logs/

############ ORACLE DEINSTALL TOOL START ############

####################### DEINSTALL CHECK OPERATION SUMMARY #######################
A log of this session will be written to: '/opt/app/oraInventory/logs/deinstall_deconfig2019-04-04_02-51-47-PM.out'
Any error messages from this session will be written to: '/opt/app/oraInventory/logs/deinstall_deconfig2019-04-04_02-51-47-PM.err'

######################## DEINSTALL CLEAN OPERATION START ########################
## [START] Preparing for Deinstall ##
Setting LOCAL_NODE to rhel72
Setting CLUSTER_NODES to rhel72
Setting CRS_HOME to false
Setting oracle.installer.invPtrLoc to /tmp/deinstall2019-04-04_02-50-37PM/oraInst.loc
Setting oracle.installer.local to true

## [END] Preparing for Deinstall ##
Setting the force flag to false
Setting the force flag to cleanup the Oracle Base
Oracle Universal Installer clean START

Detach Oracle home '/opt/app/oracle/product/18.x.0/dbhome_1' from the central inventory on the local node : Done

Delete directory '/opt/app/oracle/product/18.x.0/dbhome_1' on the local node : Done

The Oracle Base directory '/opt/app/oracle' will not be removed on local node. The directory is in use by Oracle Home '/opt/app/18.x.0/grid'.

Oracle Universal Installer cleanup was successful.

Oracle Universal Installer clean END

## [START] Oracle install clean ##

## [END] Oracle install clean ##

######################### DEINSTALL CLEAN OPERATION END #########################

####################### DEINSTALL CLEAN OPERATION SUMMARY #######################
Successfully detached Oracle home '/opt/app/oracle/product/18.x.0/dbhome_1' from the central inventory on the local node.
Successfully deleted directory '/opt/app/oracle/product/18.x.0/dbhome_1' on the local node.
Oracle Universal Installer cleanup was successful.

Review the permissions and contents of '/opt/app/oracle' on nodes(s) 'rhel72'.
If there are no Oracle home(s) associated with '/opt/app/oracle', manually delete '/opt/app/oracle' and its contents.
Oracle deinstall tool successfully cleaned up temporary directories.
#######################################################################

############# ORACLE DEINSTALL TOOL END #############
```
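Omitting -local would deinstall the home on every node, so a small guard can help. The sketch below works under a few assumptions. `safe_local_deinstall` is a hypothetical wrapper, and the node name and Oracle home come from the caller. The current host is taken from `hostname -s` unless a third argument overrides it. The same guard applies to the Grid Infrastructure home in step 6.

```shell
#!/bin/sh
# Hypothetical wrapper: run "deinstall -local" only on the node being removed.
safe_local_deinstall() {
  target_node=$1                     # node being removed, e.g. rhel72
  oracle_home=$2                     # Oracle home to remove on that node
  current_node=${3:-$(hostname -s)}  # optional override, useful for dry runs
  if [ "$current_node" != "$target_node" ]; then
    echo "refusing: running on $current_node, expected $target_node" >&2
    return 1
  fi
  if [ ! -x "$oracle_home/deinstall/deinstall" ]; then
    echo "no deinstall tool found under $oracle_home" >&2
    return 1
  fi
  # -local restricts the deinstall to this node; without it every node is affected.
  (cd "$oracle_home/deinstall" && ./deinstall -local)
}
```

For this setup the call on rhel72 would be `safe_local_deinstall rhel72 /opt/app/oracle/product/18.x.0/dbhome_1`.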

5. The last phase is to remove the clusterware from the node. Check that the node to be deleted is active and unpinned with olsnodes, which can be run as either the grid user or root. If the node is pinned, unpin it with the crsctl unpin css command.
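A quick way to check the target node in `olsnodes -s -t` output is sketched below. `check_node_before_deconfig` is a hypothetical helper. It assumes the three-column layout shown in this article (node name, Active/Inactive, Pinned/Unpinned) and only prints findings. Those findings include the `crsctl unpin css -n` command to run as root if the node is pinned. No output means the node is active and unpinned.

```shell
#!/bin/sh
# Hypothetical helper: $1 = node to be deleted. Reads "olsnodes -s -t" output
# on stdin and reports anything that would block deconfiguring that node.
check_node_before_deconfig() {
  awk -v n="$1" '
    $1 == n {
      found = 1
      if ($2 != "Active") print n " is " $2 ", expected Active"
      if ($3 == "Pinned") print "as root: crsctl unpin css -n " n
    }
    END { if (!found) print n " is not listed by olsnodes" }'
}
```

Usage: `olsnodes -s -t | check_node_before_deconfig rhel72`.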

```
olsnodes -s -t
rhel71  Active  Unpinned
rhel72  Active  Unpinned
```

6. As with the database software removal, no inventory update is needed to remove the clusterware. Run deinstall with the -local option on the node to be deleted.

```
cd $GI_HOME/deinstall
./deinstall -local
```

```
Checking for required files and bootstrapping ...
Please wait ...
Location of logs /tmp/deinstall2019-04-04_03-00-47PM/logs/

############ ORACLE DECONFIG TOOL START ############

######################### DECONFIG CHECK OPERATION START #########################
## [START] Install check configuration ##

Checking for existence of the Oracle home location /opt/app/18.x.0/grid
Oracle Home type selected for deinstall is: Oracle Grid Infrastructure for a Cluster
Oracle Base selected for deinstall is: /opt/app/oracle
Checking for existence of central inventory location /opt/app/oraInventory
Checking for existence of the Oracle Grid Infrastructure home /opt/app/18.x.0/grid
The following nodes are part of this cluster: rhel72,rhel71
Checking for sufficient temp space availability on node(s) : 'rhel72'

## [END] Install check configuration ##

Traces log file: /tmp/deinstall2019-04-04_03-00-47PM/logs//crsdc_2019-04-04_03-02-05-PM.log

Network Configuration check config START
Network de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/netdc_check2019-04-04_03-02-11PM.log
Network Configuration check config END

Asm Check Configuration START
ASM de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/asmcadc_check2019-04-04_03-02-11PM.log

Database Check Configuration START
Database de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/databasedc_check2019-04-04_03-02-11PM.log
Oracle Grid Management database was found in this Grid Infrastructure home
Database Check Configuration END

######################### DECONFIG CHECK OPERATION END #########################

####################### DECONFIG CHECK OPERATION SUMMARY #######################
Oracle Grid Infrastructure Home is: /opt/app/18.x.0/grid
The following nodes are part of this cluster: rhel72,rhel71
The cluster node(s) on which the Oracle home deinstallation will be performed are:rhel72
Oracle Home selected for deinstall is: /opt/app/18.x.0/grid
Inventory Location where the Oracle home registered is: /opt/app/oraInventory
Option -local will not modify any ASM configuration.
Oracle Grid Management database was found in this Grid Infrastructure home
Oracle Grid Management database will be relocated to another node during deconfiguration of local node
Do you want to continue (y - yes, n - no)? [n]: y
A log of this session will be written to: '/tmp/deinstall2019-04-04_03-00-47PM/logs/deinstall_deconfig2019-04-04_03-01-58-PM.out'
Any error messages from this session will be written to: '/tmp/deinstall2019-04-04_03-00-47PM/logs/deinstall_deconfig2019-04-04_03-01-58-PM.err'

######################## DECONFIG CLEAN OPERATION START ########################
Database de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/databasedc_clean2019-04-04_03-02-11PM.log
ASM de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/asmcadc_clean2019-04-04_03-02-11PM.log
ASM Clean Configuration END

Network Configuration clean config START
Network de-configuration trace file location: /tmp/deinstall2019-04-04_03-00-47PM/logs/netdc_clean2019-04-04_03-02-11PM.log
Network Configuration clean config END

Run the following command as the root user or the administrator on node "rhel72".

/opt/app/18.x.0/grid/crs/install/rootcrs.sh -force -deconfig -paramfile "/tmp/deinstall2019-04-04_03-00-47PM/response/deinstall_OraGI18Home1.rsp"

Press Enter after you finish running the above commands

<----------------------------------------
```

As root on rhel72, run the rootcrs.sh command printed by deinstall. Then return to the deinstall session and press Enter so it can complete.

```
# /opt/app/18.x.0/grid/crs/install/rootcrs.sh -force -deconfig -paramfile "/tmp/deinstall2019-04-04_03-00-47PM/response/deinstall_OraGI18Home1.rsp"
Using configuration parameter file: /tmp/deinstall2019-04-04_03-00-47PM/response/deinstall_OraGI18Home1.rsp
The log of current session can be found at:
  /tmp/deinstall2019-04-04_03-00-47PM/logs/crsdeconfig_rhel72_2019-04-04_03-04-57PM.log
CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rhel72'
CRS-2673: Attempting to stop 'ora.crsd' on 'rhel72'
CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on server 'rhel72'
CRS-2673: Attempting to stop 'ora.chad' on 'rhel72'
CRS-2673: Attempting to stop 'ora.DATA.dg' on 'rhel72'
CRS-2673: Attempting to stop 'ora.OCRDG.dg' on 'rhel72'
CRS-2673: Attempting to stop 'ora.FRA.dg' on 'rhel72'
CRS-2677: Stop of 'ora.DATA.dg' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.OCRDG.dg' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.FRA.dg' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.GIMRDG.dg' on 'rhel72'
CRS-2677: Stop of 'ora.GIMRDG.dg' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.asm' on 'rhel72'
CRS-2677: Stop of 'ora.asm' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.ASMNET1LSNR_ASM.lsnr' on 'rhel72'
CRS-2677: Stop of 'ora.chad' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.ASMNET1LSNR_ASM.lsnr' on 'rhel72' succeeded
CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rhel72' has completed
CRS-2677: Stop of 'ora.crsd' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.asm' on 'rhel72'
CRS-2673: Attempting to stop 'ora.crf' on 'rhel72'
CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rhel72'
CRS-2673: Attempting to stop 'ora.mdnsd' on 'rhel72'
CRS-2677: Stop of 'ora.drivers.acfs' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.crf' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.mdnsd' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.asm' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rhel72'
CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.ctssd' on 'rhel72'
CRS-2673: Attempting to stop 'ora.evmd' on 'rhel72'
CRS-2677: Stop of 'ora.evmd' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.ctssd' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.cssd' on 'rhel72'
CRS-2677: Stop of 'ora.cssd' on 'rhel72' succeeded
CRS-2673: Attempting to stop 'ora.gipcd' on 'rhel72'
CRS-2673: Attempting to stop 'ora.gpnpd' on 'rhel72'
CRS-2677: Stop of 'ora.gpnpd' on 'rhel72' succeeded
CRS-2677: Stop of 'ora.gipcd' on 'rhel72' succeeded
CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rhel72' has completed
CRS-4133: Oracle High Availability Services has been stopped.
2019/04/04 15:08:05 CLSRSC-4006: Removing Oracle Trace File Analyzer (TFA) Collector.
2019/04/04 15:08:43 CLSRSC-4007: Successfully removed Oracle Trace File Analyzer (TFA) Collector.
2019/04/04 15:09:11 CLSRSC-336: Successfully deconfigured Oracle Clusterware stack on this node
```
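Before moving on to the node deletion, it can be worth confirming that the deconfiguration finished. The sketch below is minimal and assumes the rootcrs.sh output was captured to a file, for example by piping it through `tee /tmp/rootcrs_deconfig.out` (a hypothetical path). The hypothetical `deconfig_succeeded` helper succeeds only if that file contains the CLSRSC-336 message shown in the output above.

```shell
#!/bin/sh
# Hypothetical helper: $1 = file holding captured rootcrs.sh output.
# Returns 0 only if the CLSRSC-336 "Successfully deconfigured" line is present.
deconfig_succeeded() {
  grep -q 'CLSRSC-336: Successfully deconfigured Oracle Clusterware stack' "$1"
}
```

Usage: `deconfig_succeeded /tmp/rootcrs_deconfig.out && echo "deconfig OK"`.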

7. From the node remaining in the cluster, run the node deletion command as root.

```
# crsctl delete node -n rhel72
CRS-4661: Node rhel72 successfully deleted.
```

8. Use cluvfy to verify the node deletion.

```
cluvfy stage -post nodedel -n rhel72

Verifying Node Removal ...
  Verifying CRS Integrity ...PASSED
  Verifying Clusterware Version Consistency ...PASSED
Verifying Node Removal ...PASSED

Post-check for node removal was successful.

CVU operation performed:      stage -post nodedel
Date:                         04-Apr-2019 15:12:44
CVU home:                     /opt/app/18.x.0/grid/
User:                         grid
```

This concludes the node deletion on 18c RAC.
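The final check can also be scripted. The sketch below assumes two files have been saved: the cluvfy output and a fresh plain `olsnodes` listing run on rhel71. `node_removal_verified` is a hypothetical helper. It succeeds only when cluvfy reports the successful post-check and the removed node no longer appears in the olsnodes listing.

```shell
#!/bin/sh
# Hypothetical helper: $1 = removed node, $2 = saved cluvfy output,
# $3 = saved olsnodes output. Returns 0 only if both checks pass.
node_removal_verified() {
  node=$1
  cluvfy_out=$2
  olsnodes_out=$3
  # cluvfy prints this line when the post-nodedel stage passes.
  grep -q 'Post-check for node removal was successful' "$cluvfy_out" || return 1
  # The first olsnodes column is the node name; awk exits 0 if the node is
  # found, so the negation succeeds only when the node is absent.
  ! awk -v n="$node" '$1 == n { found = 1 } END { exit !found }' "$olsnodes_out"
}
```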
